Microsoft Phi-4 Mini: The Definitive Research Report on Reasoning SLMs, Architecture, and Copilot+ Integration

1. Introduction: Phi-4 Mini — The “Hype” and Reality of 2025

The trajectory of artificial intelligence development has undergone a profound bifurcation in 2025. For the better part of the previous decade, the dominant narrative was governed by the scaling laws of deep learning, which posited a direct, immutable correlation between model performance and parameter count. The industry obsessed over “frontier models”—behemoths with hundreds of billions, eventually trillions, of parameters requiring datacenter-scale energy and compute. However, a counter-narrative has steadily gained momentum, culminating in the release of the Microsoft Phi-4 mini. This model represents not merely a downsizing of existing technology but a fundamental rethinking of how intelligence is encoded in neural networks.

The “hype” surrounding Phi-4 mini in 2025 is substantial, but unlike the speculative bubbles of previous tech cycles, this enthusiasm is grounded in tangible architectural breakthroughs and a desperate market need for on-device AI. The centralized cloud inference model, while powerful, faces insurmountable barriers regarding latency, privacy, and economic scalability for ubiquitous, always-on applications. The industry’s gaze has thus turned to the edge—to the laptops, smartphones, and embedded devices where data is generated and where users live.

1.1 The Pivot to High-Density Intelligence

The central thesis driving the Phi-4 family is that data quality can substitute for parameter quantity. This concept, often termed “data-centric AI,” suggests that a model trained on “textbook-quality” data (curated, synthetic, and reasoning-dense) can outperform significantly larger models trained on the noisy, unstructured data of the open web.1 Phi-4 mini, with its 3.8 billion parameters, is the clearest expression of this philosophy. It does not attempt to memorize the internet; instead, it attempts to internalize the processes of reasoning.3

By achieving benchmark scores on complex math and reasoning tasks (such as AIME and GPQA) that rival models ten to twenty times its size, Phi-4 mini supports the hypothesis that reasoning is a learnable capability that does not depend on massive scale.5 This realization has triggered an “arms race” in the Small Language Model (SLM) sector, where the goal is no longer to build the biggest brain, but the most efficient one.

1.2 The Context of 2025: The Edge AI Imperative

In 2025, the hardware landscape has finally caught up with the software aspirations. The proliferation of Copilot+ PCs—computers equipped with dedicated Neural Processing Units (NPUs) capable of 40+ Trillion Operations Per Second (TOPS)—has created a hardware install base hungry for optimized software.7 A 3.8-billion parameter model is strategically sized to fit comfortably within the VRAM of a discrete GPU or the unified memory architecture of a modern SoC (System on Chip) while leaving sufficient resources for the operating system and other applications.

This report will conduct a forensic analysis of the Phi-4 mini ecosystem. We will dissect the architectural innovations of the Phi-4-mini-flash-reasoning variant, exploring its hybrid SambaY architecture that blends the best of Transformers and State Space Models.9 We will examine the economic implications of shifting from cloud-based Large Language Models (LLMs) to local SLMs, detailing the cost savings in inference and energy. Furthermore, we will investigate the integration of these models into the Windows ecosystem through the legacy of Phi Silica, and their broader potential in the Edge AI and IoT markets.

2. Architecture: Redefining the 3.8B Parameter Envelope

To understand the capabilities of Phi-4 mini, one must look beyond the topline parameter count and examine the internal architecture and training methodology that allow 3.8 billion parameters to punch so far above their weight.

2.1 The Core Architecture: Dense Decoder-Only Transformer

The standard Phi-4-mini-instruct model adheres to the proven dense decoder-only Transformer architecture, the same lineage that gave rise to the GPT series and Llama.10 However, within this familiar framework, Microsoft has implemented critical optimizations to maximize information density.

- Parameter Count: At 3.8 billion parameters, the model occupies a “Goldilocks” zone. It is significantly more capable than the 1-2 billion parameter “nano” models often used for simple text prediction, yet it remains light enough to run on consumer hardware with 8GB or even 4GB of RAM (when quantized).

- Vocabulary Size: The model features a vocabulary of 200,000 tokens.11 This is a substantial increase over typical models (often 32k or 50k). A larger vocabulary means that the model can represent complex concepts, code syntax, or multilingual terms as single tokens rather than fragmented sub-words. This improves encoding efficiency, effectively expanding the context window’s utility and speeding up inference, as fewer tokens are needed to express the same amount of information.

- Shared Input-Output Embeddings: To conserve parameters and memory bandwidth, Phi-4 mini utilizes shared input and output embeddings.11 This technique, while not new, is crucial in constrained environments, ensuring that the limited parameter budget is spent on the deep reasoning layers rather than redundant embedding matrices.
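The memory claims in this list can be checked with simple arithmetic. The sketch below estimates weight storage for 3.8 billion parameters at a few precisions, and the size of the embedding matrix that weight tying avoids duplicating. The parameter count and vocabulary size come from the text; the hidden size of 3072 is an assumption used only for illustration, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Back-of-the-envelope weight memory for a 3.8B-parameter model.
PARAMS = 3_800_000_000  # parameter count quoted in the text
VOCAB = 200_000         # vocabulary size quoted in the text
HIDDEN = 3072           # ASSUMPTION: illustrative hidden size, not from the text

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gb(params: int, bytes_per_param: float) -> float:
    """Weight storage in gigabytes (10^9 bytes), weights only."""
    return params * bytes_per_param / 1e9


def embedding_params(vocab: int, hidden: int) -> int:
    """Parameters in one vocabulary-by-hidden embedding matrix."""
    return vocab * hidden


for name, width in BYTES_PER_PARAM.items():
    print(f"{name}: {weight_gb(PARAMS, width):.1f} GB of weights")

# With tied embeddings the output projection reuses the input matrix,
# so this many parameters are not stored a second time.
saved = embedding_params(VOCAB, HIDDEN)
print(f"tying avoids a second matrix of about {saved / 1e6:.0f}M parameters")
```

Under these assumptions the INT4 weights come to roughly 1.9 GB, which is why a quantized build can plausibly share a 4GB or 8GB machine with the operating system.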

2.2 Context Window and Attention Mechanisms

The standard Phi-4 mini supports a massive 128,000 token context window.10 This capability is transformative for edge applications. It allows a user to load an entire novel, a comprehensive legal contract, or a significant portion of a software codebase directly into the model’s working memory on a laptop.

- Grouped-Query Attention (GQA): To manage the computational cost of such a large context, the model employs Grouped-Query Attention.11 GQA strikes a balance between the quality of Multi-Head Attention (MHA) and the speed of Multi-Query Attention (MQA). By grouping query heads, it significantly reduces the size of the KV (Key-Value) cache, which is often the bottleneck for long-context inference on memory-constrained devices.
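To make the KV-cache saving concrete, the following sketch compares cache size when every query head has its own key/value head (MHA) against a grouped layout with fewer key/value heads (GQA). The layer count, head counts, and head dimension are assumptions chosen for illustration rather than figures from the text; only the 128,000-token context length is taken from above.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # The leading factor of 2 covers the separate key and value tensors.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# ASSUMPTIONS: illustrative model shape, not taken from the text.
N_LAYERS, N_QUERY_HEADS, N_KV_HEADS, HEAD_DIM = 32, 24, 8, 128
SEQ_LEN = 128_000  # context length quoted in the text

mha = kv_cache_bytes(SEQ_LEN, N_LAYERS, N_QUERY_HEADS, HEAD_DIM)
gqa = kv_cache_bytes(SEQ_LEN, N_LAYERS, N_KV_HEADS, HEAD_DIM)

print(f"MHA cache: {mha / 1e9:.1f} GB")
print(f"GQA cache: {gqa / 1e9:.1f} GB ({mha // gqa}x smaller)")
```

The reduction factor equals the number of query heads per key/value head, so the saving depends on the grouping ratio and not on the sequence length.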

2.3 The Training Curriculum: The “Textbook” Approach

The “secret sauce” of the Phi series remains its data curation. Microsoft Research has doubled down on the use of synthetic data generated by larger “teacher” models.2

- Data Volume: The model was trained on 5 trillion tokens.10 That is smaller than the datasets used for frontier models (which exceed 15 trillion tokens), but the data is far more heavily curated for quality.

- Reasoning-Dense Data: The training set is heavily weighted towards “reasoning-dense” content—logic puzzles, step-by-step mathematical solutions, and heavily commented code. The hypothesis is that exposure to the process of solving a problem is more valuable than exposure to the answer.

- Filtering: Rigorous filtering of organic web data removes the “noise” of the internet—conversational filler, incoherent text, and factual errors—leaving a distilled dataset that resembles a high-quality textbook.5

3. Phi-4-mini-flash-reasoning: The SambaY Hybrid Revolution

While the standard Phi-4 mini is an evolution of the Transformer, the Phi-4-mini-flash-reasoning variant represents a revolution. It introduces a hybrid architecture known as SambaY, which fundamentally alters how the model processes sequences and memory.9

3.1 The Bottleneck of Transformers

To appreciate SambaY, one must understand the limitations of the traditional Transformer. The attention mechanism in a Transformer has a computational complexity of $O(N^2)$ with respect to the sequence length $N$. As the prompt or context grows, the compute required to process it grows quadratically. This creates a “memory wall” and high latency for long-context tasks, making them sluggish on edge devices.
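A quick way to see the quadratic growth is to count the query-key scores that causal self-attention must compute. The helper name below is purely illustrative.

```python
def attention_score_count(n_tokens: int) -> int:
    """Number of query-key scores in causal self-attention over n tokens."""
    # Token i attends to tokens 0..i, so the total is 1 + 2 + ... + n.
    return n_tokens * (n_tokens + 1) // 2


for n in (1_000, 2_000, 4_000, 8_000):
    print(f"{n:>6} tokens -> {attention_score_count(n):>12,} scores")
```

Each doubling of the sequence length roughly quadruples the number of scores, which is the $O(N^2)$ behavior described above.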

3.2 The SambaY Architecture: SSM + Attention

SambaY is a decoder-hybrid-decoder architecture. It combines the strengths of State Space Models (SSMs), specifically the Mamba architecture, with traditional attention mechanisms.9

- SSMs (Mamba): State Space Models scale linearly $O(N)$ with sequence length. They function somewhat like Recurrent Neural Networks (RNNs) in that they maintain a compressed “state” of the history, allowing for incredibly fast inference and low memory usage. However, pure SSMs sometimes struggle with “associative recall”—referencing a specific, precise detail from far back in the context.

- Hybrid Design: SambaY uses a Self-Decoder based on Samba (an SSM variant) combined with Sliding Window Attention (SWA). This handles the bulk of the token processing with linear efficiency.
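The two ingredients named above can be illustrated with a toy example. The first function is a one-dimensional linear state-space recurrence whose state stays the same size however long the input is; real Mamba layers use learned, input-dependent parameters and vector states, so this is a simplification. The second function lists which positions a token may attend to under causal sliding-window attention. All names and constants are hypothetical.

```python
def ssm_scan(inputs, a=0.9, b=0.1, c=1.0):
    """Toy one-dimensional linear state-space recurrence.

    h_t = a * h_{t-1} + b * x_t
    y_t = c * h_t
    The state h is a single number regardless of sequence length.
    """
    h = 0.0
    outputs = []
    for x in inputs:
        h = a * h + b * x      # fold the new input into the fixed-size state
        outputs.append(c * h)  # read the output from the state
    return outputs


def sliding_window_positions(t, window):
    """Positions token t may attend to under causal sliding-window attention."""
    return list(range(max(0, t - window + 1), t + 1))


print(ssm_scan([1.0, 0.0, 0.0]))
print(sliding_window_positions(t=10, window=4))
```

Both pieces do a bounded amount of work per token (one state update, or at most `window` attention scores), which is where the linear scaling comes from.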

3.3 The Gated Memory Unit (GMU)

The critical innovation in SambaY is the Gated Memory Unit (GMU).9

- Function: The GMU serves as an intelligent bridge between the efficient SSM layers and the powerful attention layers. It selectively determines which information from the SSM’s hidden state needs to be “promoted” or retained for the attention mechanism to access.

- Selective Memory Sharing: Instead of every layer needing to access the entire history, the GMU curates the memory flow. This reduces the computational cost of the “Cross-Decoder” layers (which interleave attention and GMUs) while maintaining the model’s ability to perform deep reasoning over long contexts.
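The gating idea can be sketched in a few lines. This is a minimal illustration of element-wise gating, where a projection of the current hidden state decides how much of each shared memory channel passes through. The choice of SiLU as the gate activation, the two projection matrices, and every name here are assumptions for illustration, not a description of the exact SambaY implementation.

```python
import math


def silu(z: float) -> float:
    """SiLU activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def gated_memory_unit(hidden, memory, w_gate, w_out):
    """Gate a shared memory vector with the current layer's hidden state.

    hidden: current-layer input for one token
    memory: state vector shared from an earlier layer for the same token
    w_gate, w_out: hypothetical learned projection matrices
    """
    gate = [silu(g) for g in matvec(w_gate, hidden)]
    gated = [m * g for m, g in zip(memory, gate)]  # element-wise gating
    return matvec(w_out, gated)


identity = [[1.0, 0.0], [0.0, 1.0]]
print(gated_memory_unit([2.0, -2.0], [1.0, 1.0], identity, identity))
```

With identity projections, the first memory channel is passed through strongly and the second is largely suppressed. The cost per token is a couple of small matrix products, with no dependence on how long the context is.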

3.4 Differential Attention

Further enhancing the reasoning capability is Differential Attention.9

- Mechanism: Standard softmax attention tends to spread some weight over irrelevant context. Differential Attention computes two separate softmax attention maps and subtracts one from the other, scaled by a learnable factor, so that noise common to both maps cancels out.

- Impact: This allows the model to “focus” more intensely on specific logical threads or semantic features, filtering out noise. This mechanism is credited with boosting the model’s performance on math benchmarks (like Math500 and AIME) without the need for computationally expensive Reinforcement Learning (RL) typically used to fine-tune reasoning.9
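One published formulation of differential attention takes the difference between two softmax attention maps so that weight common to both cancels. The sketch below shows that idea for a single query with scalar values per position. The scaling factor `lam` is learnable in practice and fixed here, and the function names are illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def differential_attention(scores_a, scores_b, values, lam=0.5):
    """Combine two attention maps by subtraction for a single query.

    scores_a, scores_b: raw query-key scores from two separate projections
    values: one scalar value per key position (scalar for brevity)
    lam: scaling factor, learnable in practice and fixed here
    """
    map_a = softmax(scores_a)
    map_b = softmax(scores_b)
    weights = [a - lam * b for a, b in zip(map_a, map_b)]
    output = sum(w * v for w, v in zip(weights, values))
    return output, weights


# The first map prefers position 0; the second is uniform "background" weight.
output, weights = differential_attention(
    scores_a=[2.0, 0.0, 0.0],
    scores_b=[0.0, 0.0, 0.0],
    values=[1.0, 10.0, 10.0],
)
print(weights, output)
```

Subtracting the uniform second map lowers the weight on the two distractor positions more, in relative terms, than on the position the first map prefers, which is the noise-cancelling effect this mechanism aims for.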

3.5 Performance Implications: The “Flash” Speed

The synergy of these components results in a model that is drastically faster than a pure Transformer equivalent.

- Throughput: SambaY achieves up to 10x higher decoding throughput on long-context tasks compared to traditional architectures.9

- Latency: It preserves linear prefilling time complexity. For a user, this means that pasting a 50-page document into the chat window results in near-instant readiness, rather than the “processing…” pause typical of standard LLMs.

- Efficiency: The reduced computational load directly translates to lower power consumption, a critical metric for battery-powered devices like laptops and tablets.

Phi-4 Model Architecture Comparison: Standard vs. Flash (SSM Hybrid)

The comparison highlights the move from the traditional quadratic self-attention mechanism to a hybrid architecture using **State Space Models (SSM)**, which offers dramatically increased throughput, especially for processing long context windows.

| Feature | Phi-4-mini-instruct | Phi-4-mini-flash-reasoning |
|---|---|---|
| Architecture | Dense Decoder-only Transformer | Hybrid SambaY (SSM + Attention) |
| Core Mechanism | Self-Attention (Quadratic $O(N^2)$) | Mamba SSM + Sliding Window Attention (Linear $O(N)$) |
| Key Innovation | Deep Reasoning Data Curriculum | Gated Memory Unit (GMU), Differential Attention |
| Context Length | 128,000 Tokens | 64,000 Tokens |
| Inference Speed | Standard | Flash (Up to 10x throughput on long context) |
| Primary Use Case | General Instruction, RAG, Chat | Complex Math, Logic, Real-time Agents |

4. SLM vs. LLM: The Great Paradigm Shift

The release of Phi-4 mini is a defining moment in the broader industry shift from Large Language Models (LLMs) to Small Language Models (SLMs). This shift is not merely a diversification of product lines; it is a correction of the market's trajectory.

4.1 Challenging the Scaling Laws

For years, the "scaling laws" (Kaplan et al., 2020) were read as saying that performance is a power-law function of parameter count, dataset size, and compute, with the implication that small models were inherently limited. Phi-4 mini challenges this assumption by demonstrating that the slope of the scaling curve changes dramatically with data quality.

- The Efficiency-Competency Frontier: Phi-4 mini (3.8B) outperforms models like Llama-2 (70B) and rivals Llama-3.1 (8B) and Mistral Large in specific reasoning domains. This suggests that a significant portion of an LLM's parameters are dedicated to memorizing "world knowledge" (trivia, dates, facts) rather than "intelligence" (reasoning, logic, syntax).

- Decoupled Intelligence: By offloading world knowledge to search engines or RAG systems, SLMs can dedicate their limited parameter budget exclusively to reasoning. This creates a modular AI architecture: a small, smart "brain" (SLM) accessing a massive "library" (Search/RAG).
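The "small brain plus large library" pattern can be sketched as a retrieval step that assembles a prompt for a local model. The retriever here is a toy word-overlap ranker and the library contents are placeholder strings drawn from this article; a real system would use an embedding index, and the final model call is deliberately left out.

```python
def retrieve(query: str, library: dict[str, str], top_k: int = 1) -> list[str]:
    """Rank documents by how many query words they share (toy retriever)."""
    query_words = set(query.lower().split())

    def overlap(text: str) -> int:
        return len(query_words & set(text.lower().split()))

    ranked = sorted(library.values(), key=overlap, reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, library: dict[str, str]) -> str:
    """Put retrieved facts in the prompt so the model only has to reason."""
    context = "\n".join(retrieve(query, library))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer step by step."


# HYPOTHETICAL library: in practice this would be a search index or vector store.
LIBRARY = {
    "npu": "Copilot+ PCs require an NPU capable of 40+ TOPS.",
    "vocab": "Phi-4 mini uses a vocabulary of 200,000 tokens.",
}

print(build_prompt("What NPU do Copilot+ PCs require?", LIBRARY))
```

The facts live in the library and can be updated without retraining, while the model's parameters are spent on reading the context and reasoning over it.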

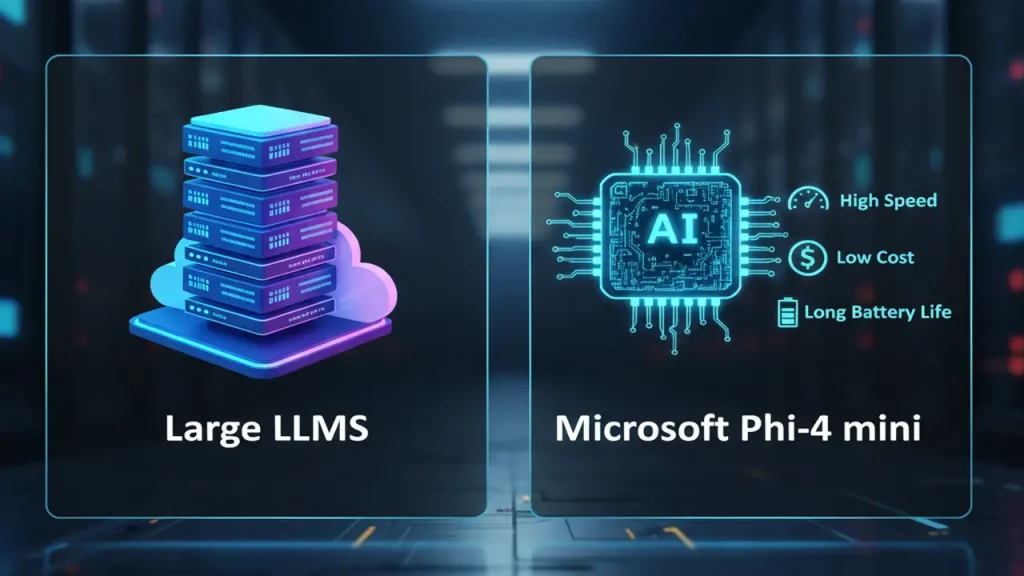

4.2 The Economics of Inference

The economic argument for SLMs is undeniable.

- Cloud Cost: Running a 70B parameter model requires enterprise-grade hardware (e.g., NVIDIA A100/H100 clusters) costing tens of thousands of dollars. A 3.8B model can be hosted on cheap, commodity GPUs or even CPU instances. Azure AI Foundry pricing for Phi-4-mini is roughly $0.000075 per 1,000 input tokens, a fraction of the cost of GPT-4 class models.

- Device Cost: For businesses, the ability to run models on existing employee laptops (On-Premises / On-Device) eliminates cloud inference bills entirely. The "Capex" (buying the laptop) is already sunk; the "Opex" (running the model) becomes electricity.
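The per-token price quoted above is easier to judge when scaled to a workload. The sketch below uses the quoted input-token rate; the workload size is a hypothetical example, and output tokens (priced separately) are not included.

```python
PRICE_PER_1K_INPUT_TOKENS = 0.000075  # USD, figure quoted in the text


def input_cost_usd(n_tokens: int, price_per_1k: float = PRICE_PER_1K_INPUT_TOKENS) -> float:
    """Cost of processing n input tokens at a per-1,000-token price."""
    return n_tokens / 1000 * price_per_1k


# HYPOTHETICAL workload: 500 employees sending 2,000 input tokens per day.
EMPLOYEES = 500
TOKENS_PER_EMPLOYEE_PER_DAY = 2_000

daily_tokens = EMPLOYEES * TOKENS_PER_EMPLOYEE_PER_DAY
print(f"daily input tokens: {daily_tokens:,}")
print(f"daily input cost:   ${input_cost_usd(daily_tokens):.3f}")
print(f"yearly input cost:  ${input_cost_usd(daily_tokens * 365):.2f}")
```

At this rate one million input tokens cost about $0.075, so for light workloads the hosted price is already small; the on-device argument rests more on privacy, latency, and offline use than on this line item alone.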

4.3 Energy Efficiency and Sustainability

As AI becomes ubiquitous, the energy footprint of massive datacenters has become a critical concern. LLMs are energy hogs. An SLM running on a dedicated NPU needs only a small amount of energy per token, whereas a single cloud-based LLM query has been likened to charging a smartphone. Phi-4 mini, particularly the Flash Reasoning variant, is optimized for this low-power envelope, enabling "green AI" that scales without wrecking the grid.

5. On-Device AI for Copilot+ PCs

Microsoft's strategic vision for Windows centers on the Copilot+ PC—a new class of Windows machines defined by their hardware capability to run AI locally.

5.1 The Hardware Foundation: The NPU

The defining feature of a Copilot+ PC is the Neural Processing Unit (NPU).

- The Need for NPUs: While GPUs are excellent for parallel processing, they are often power-hungry and optimized for graphics pipelines. NPUs are domain-specific accelerators designed for the tensor operations (matrix multiplications) fundamental to AI. They are optimized for "inference per watt."

- Specs: The baseline requirement for a Copilot+ PC is an NPU capable of 40+ TOPS (Trillions of Operations Per Second). This standard is currently met by silicon such as the Qualcomm Snapdragon X Elite, Intel Core Ultra (Lunar Lake), and AMD Ryzen AI 300 series.

- Phi-4 Optimization: Phi-4 mini is explicitly tuned to run on these architectures. Microsoft provides quantized versions (INT4) that fit within the memory bandwidth constraints of these chips (typically LPDDR5x) while maximizing the TOPS utilization.
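For readers unfamiliar with INT4, the following sketch shows one common scheme, symmetric per-group quantization, in which a group of weights shares a single scale and each weight is stored as a signed 4-bit integer. Whether the shipped Phi-4 mini builds use this exact scheme is an assumption; production toolchains typically add refinements such as zero points or calibration.

```python
def quantize_int4(weights):
    """Symmetric per-group quantization to signed 4-bit integers in [-8, 7]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int4(codes, scale):
    """Recover approximate weights from 4-bit codes and the shared scale."""
    return [c * scale for c in codes]


group = [0.70, -0.33, 0.12, 0.0]  # made-up weights for illustration
codes, scale = quantize_int4(group)
restored = dequantize_int4(codes, scale)

print("codes:   ", codes)
print("restored:", [round(r, 3) for r in restored])
print("max error:", max(abs(g - r) for g, r in zip(group, restored)))
```

Each weight now takes half a byte plus a share of the group's scale, which is where the roughly fourfold saving over FP16 comes from; the price is a rounding error of at most half a quantization step per weight.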

5.2 The Privacy Advantage

Running Phi-4 mini locally on a Copilot+ PC addresses the single biggest barrier to enterprise AI adoption: Privacy.

- Local Processing: When a user asks Copilot to "summarize this confidential legal document" or "rewrite this internal email," the data never leaves the device. There is no API call to the cloud, no data transit, and no risk of leakage.

- GDPR/Compliance: For industries like healthcare and finance, this local processing capability is a game-changer, allowing them to deploy AI tools that are compliant with strict data residency regulations by default.

5.3 Latency and Responsiveness

Local AI eliminates network latency. Features powered by Phi-4 mini—such as real-time grammar correction, live translation, or "Click to Do" context actions—feel instantaneous. The user interface remains fluid because the inference happens at the speed of the local bus, not the speed of the internet connection.

6. Windows Copilot+ Local AI: The Phi Silica Connection

The integration of Phi-4 mini into Windows is not an isolated event but the continuation of a roadmap started with Phi Silica.

6.1 The Legacy of Phi Silica

Phi Silica was the first SLM purpose-built for the NPU.

- Origin: Derived from Phi-3-mini, Silica was a 3.3 billion parameter model heavily optimized for the Snapdragon NPU instruction set.

- Role: It powered the initial wave of "AI experiences" in Windows 11, such as the controversial but innovative "Recall" feature (which creates a semantic timeline of user activity). Silica proved that a 3B model could run persistently in the background without draining the battery.

6.2 The Transition to Phi-4: The Upgrade Path

Phi-4 mini and Phi-4-mini-reasoning serve as the direct successors to Silica.

- Capability Boost: While Silica was capable of summarization and simple rewriting, Phi-4 brings reasoning. This means the OS can now understand intent and complex logic. Instead of just "summarize this text," the OS can "analyze the sentiment of this text and suggest a diplomatic response based on the previous email chain."

- Deployment: Microsoft is rolling out Phi-4-reasoning models optimized via ONNX (Open Neural Network Exchange) to Copilot+ PCs. This ensures that developers can target a standard API (DirectML) and have the model run efficiently regardless of whether the underlying NPU is from Intel, AMD, or Qualcomm.

6.3 The "Hybrid Loop"

The ultimate vision is the Hybrid Loop.

- Mechanism: The operating system dynamically routes tasks. Simple, high-frequency, or privacy-sensitive tasks are routed to the local Phi-4 mini on the NPU. Complex, knowledge-heavy queries are routed to the cloud (GPT-4o).

- Developer Experience: For developers, this abstraction is handled by the Windows Copilot Runtime. They simply request an inference, and the system decides the most efficient execution path.
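The routing decision described in this list can be sketched as a small policy function. The `Task` fields and the rules are hypothetical and only illustrate the idea; they are not the Windows Copilot Runtime API, whose actual interface is not described in this report.

```python
from dataclasses import dataclass


@dataclass
class Task:
    prompt: str
    privacy_sensitive: bool = False
    needs_world_knowledge: bool = False


def route(task: Task) -> str:
    """Return 'local' or 'cloud' for a task (hypothetical policy)."""
    if task.privacy_sensitive:
        return "local"  # private data stays on the device, whatever else applies
    if task.needs_world_knowledge:
        return "cloud"  # knowledge-heavy queries go to the larger model
    return "local"      # default: cheap, low-latency on-device inference


tasks = [
    Task("Rewrite this internal email", privacy_sensitive=True),
    Task("Summarize recent research on a topic", needs_world_knowledge=True),
    Task("Fix the grammar in this sentence"),
]
for task in tasks:
    print(f"{route(task):>5}: {task.prompt}")
```

Checking the privacy flag first is a deliberate design choice in this sketch: a task that is both sensitive and knowledge-heavy stays local and accepts a weaker answer instead of sending data off the device.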

7. Edge AI Reasoning Model: IoT and Enterprise

The utility of Phi-4 mini extends far beyond the laptop form factor. Its high reasoning-to-size ratio makes it the ideal "brain" for the Internet of Things (IoT) and the intelligent edge.

7.1 Mobile and Embedded Ecosystems

- MediaTek: The mobile chip giant has optimized Phi-4 mini for its Dimensity 9400 platform.

- Performance: On a high-end smartphone chip, Phi-4 mini achieves a prefill speed of over 800 tokens per second and a decode speed of 21 tokens per second. This is faster than human reading speed, enabling real-time conversational agents on a phone without an internet connection.

- Implication: This brings "Agentic AI" to mobile. A phone could autonomously navigate complex apps, book appointments, or sort photos based on abstract criteria ("find photos of my dog looking sad") using local reasoning.
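The quoted prefill and decode speeds translate into user-visible latency as follows. The prompt and response lengths are hypothetical examples, and the model ignores load time and any slowdown at long context.

```python
PREFILL_TOKENS_PER_SEC = 800  # figure quoted in the text
DECODE_TOKENS_PER_SEC = 21    # figure quoted in the text


def time_to_first_token(prompt_tokens: int) -> float:
    """Seconds spent reading the prompt before generation starts."""
    return prompt_tokens / PREFILL_TOKENS_PER_SEC


def total_response_time(prompt_tokens: int, output_tokens: int) -> float:
    """Seconds to read the prompt and then generate the full response."""
    return time_to_first_token(prompt_tokens) + output_tokens / DECODE_TOKENS_PER_SEC


# HYPOTHETICAL request: an 800-token prompt and a 210-token answer.
print(f"time to first token: {time_to_first_token(800):.1f} s")
print(f"total response time: {total_response_time(800, 210):.1f} s")
```

For short answers the decode speed dominates the total, which is why the 21 tokens per second figure matters more for conversational use than the much larger prefill number.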

7.2 Robotics and Industrial IoT

- NVIDIA Jetson: In the robotics world, the NVIDIA Jetson Orin series is the standard. While a Raspberry Pi 5 struggles with memory bandwidth (approx. 9 GB/s, limiting it to ~1 token/sec for 3B models), the Jetson Orin Nano (with GPU acceleration) can run Phi-4 mini effectively.

- Use Cases:

  - Autonomous Navigation: A robot can use Phi-4 mini to interpret natural language commands ("Go to the kitchen and find the red cup") and decompose them into actionable planning steps (Chain of Thought).

  - Predictive Maintenance: On an industrial factory floor, an edge gateway running Phi-4 mini can analyze complex sensor logs. Instead of simple threshold alerting, the model can reason about the correlation between vibration spikes and temperature drops to diagnose specific bearing failures before they happen.

7.3 The Raspberry Pi Frontier

While the Raspberry Pi 5 is not a powerhouse, the release of highly quantized (GGUF/llama.cpp) versions of Phi-4 mini makes it accessible to the maker community. Running at 1-2 tokens per second is too slow for chat, but sufficient for background tasks like home automation logic ("If the temperature is rising and no one is home, close the blinds") or non-interactive data summarization.
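Token generation on small boards is usually limited by memory bandwidth, because producing each token requires reading roughly all of the model's weights once. The sketch below turns that rule of thumb into an upper bound, using the approximate 9 GB/s bandwidth figure quoted earlier in this section and the 3.8B parameter count. It ignores KV-cache traffic and compute limits, so real throughput will be lower than the bound.

```python
def max_decode_tokens_per_sec(bandwidth_gb_s: float, params_billion: float,
                              bytes_per_param: float) -> float:
    """Upper bound on decode speed if every weight is read once per token."""
    model_gb = params_billion * bytes_per_param
    return bandwidth_gb_s / model_gb


BANDWIDTH_GB_S = 9.0  # approximate Raspberry Pi 5 figure quoted in the text
PARAMS_BILLION = 3.8  # Phi-4 mini parameter count from the text

for name, width in (("fp16", 2.0), ("int8", 1.0), ("int4", 0.5)):
    bound = max_decode_tokens_per_sec(BANDWIDTH_GB_S, PARAMS_BILLION, width)
    print(f"{name}: at most {bound:.1f} tokens/sec")
```

Even the most aggressive quantization leaves the bound in the single digits, which is consistent with the observed 1-2 tokens per second once real-world overheads are included.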

8. Phi-4 Mini vs. Large LLMs: A Comparative Analysis

The most disruptive aspect of Phi-4 mini is its competitive positioning against models that are many times larger.

8.1 Comparison with Llama 3.2 (3B)

Meta's Llama 3.2 3B is the direct competitor in the SLM weight class.

- Reasoning Gap: Benchmark data reveals a significant gap. On MATH-500, Phi-4 mini (base) scores 71.8 and the reasoning variant scores 94.6. In contrast, Llama 3.2 3B Instruct scores roughly 44.4.

- Analysis: This disparity highlights the difference in training data. Llama is trained as a general-purpose model; Phi-4 is trained as a reasoning engine. For tasks involving logic, math, or code, Phi-4 mini is in a different league.

8.2 Comparison with GPT-4o-mini

OpenAI's GPT-4o-mini is a distilled, efficient model, but it is primarily a cloud model.

- Performance: Phi-4-reasoning demonstrates superior performance to GPT-4o-mini on STEM-related QA benchmarks.

- The Edge Factor: While GPT-4o-mini might have a broader world knowledge base, Phi-4 mini wins on latency and privacy when deployed locally. For a developer building a local coding assistant, Phi-4 mini offers a better trade-off.

8.3 Comparison with 70B+ Models (Llama 3.1, Qwen 2.5)

It sounds improbable, but in specific reasoning domains, Phi-4 (and to a lesser extent, the mini) challenges the 70B giants.

- The Evidence: Phi-4 (14B) outperforms Llama 3.3 (70B) on 6 out of 13 math/reasoning benchmarks. While the mini (3.8B) does not beat the 70B models across the board, the fact that it is even comparable on metrics like AIME (solving ~33-52% of problems) is a testament to the efficiency of the architecture. A 3.8B model running on a laptop doing work that previously required a server farm is a paradigm shift.

9. Benchmarks: Quantifying the Intelligence

To empirically validate the "Reasoning" claim, we must examine the "hard" benchmarks—those designed to break simple pattern-matching models.

9.1 The Benchmark Suite

- AIME (American Invitational Mathematics Examination): High-school competition math requiring creative, multi-step problem solving.

- MATH / MATH-500: A collection of challenging math problems (algebra, calculus, probability).

- GPQA (Graduate-Level Google-Proof Q&A): PhD-level science questions designed to be unanswerable via simple search.

9.2 Phi-4-mini-flash-reasoning Results

SLM Advanced Reasoning Benchmarks (3.8B Parameter Model)

These scores demonstrate the effectiveness of targeted training and data efficiency, enabling a Small Language Model (SLM) to achieve performance levels in competitive mathematics and complex science (GPQA) that rival models 10 to 100 times its size.

| Benchmark | Score | Interpretation |
|---|---|---|
| Math500 | 92.45% | Near mastery of standard mathematical concepts. The model makes few calculation or logic errors on standard problems. |
| AIME 2024 | 52.29% | This is the **standout metric**. Solving over half of AIME problems places this 3.8B model in the tier of "**Frontier Intelligence**." Most models under 10B parameters score near 0-10% here. |
| AIME 2025 | 33.59% | Performance on the most recent (unseen) test is lower but still remarkably high, suggesting the model hasn't just memorized older test sets. |
| GPQA Diamond | 45.08% | Answering nearly half of **PhD-level science questions** correctly. This indicates deep semantic understanding of complex scientific domains. |

9.3 Strategic Implications of Scores

These scores are not just numbers; they are proof of concept for Chain of Thought (CoT) in small models. The high AIME scores indicate that Phi-4 mini can effectively "think" through a problem, breaking it down into intermediate steps, rather than trying to intuit the answer in one go. This capability, previously thought to emerge only at huge scales (the "emergence" hypothesis), is now proven to be distillable.

10. Conclusion: The Future of SLMs

The release of Microsoft Phi-4 mini in 2025 marks the end of the "Bigger is Better" era and the beginning of the "Smarter is Better" era.

10.1 Key Takeaways

- Reasoning is Distillable: Microsoft has proven that high-level reasoning capabilities can be compressed into a 3.8B parameter container through the use of high-quality synthetic data and teacher-student training.

- Architecture Re-emerges: The dominance of the vanilla Transformer is cracking. The SambaY architecture in Phi-4-mini-flash-reasoning demonstrates that hybrid designs (SSM + Attention) are necessary to break the memory wall and enable efficient long-context reasoning on the edge.

- The Edge is Intelligent: With Copilot+ PCs and NPUs, the hardware is finally ready to support meaningful local AI. Phi-4 mini is the software engine that unlocks this hardware potential, enabling privacy-preserving, zero-latency applications.

10.2 Future Outlook

Looking ahead, we can expect the "Flash Reasoning" techniques (SambaY, Differential Attention) to be adopted across the industry. The distinction between "SLM" and "LLM" will become less about capability and more about deployment target. We are moving toward a world of ubiquitous intelligence, where every device—from your thermostat to your laptop—possesses a reasoning engine capable of understanding complex intent. Microsoft Phi-4 mini is the pioneer of this new reality.
