AI industry disruptions: the main changes in the industry, explained simply and practically

AI Scheduling for Manufacturing: Boost Shifts Fast

AI scheduling for manufacturing that optimizes shifts, runs what-if for idle lines, and syncs with ERP. Cut costs and boost throughput.

7-day AI course — build your first pilot

7-day AI course for beginners: a simple and practical program. In one week – from zero to a pilot AI project: no-code, LLM basics.

7 Shocking AI Red Teaming Lessons

on-device AI 2025 offline: Windows Copilot+, Apple Intelligence, MDM-borders and offline-advertising. What really works.

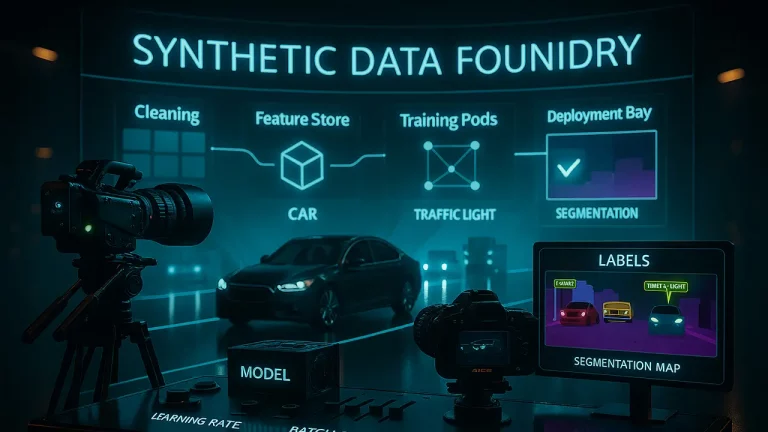

Top 10 Synthetic Data Engines

Synthetic data engines for logistics & fintech: see accuracy uplift, class balance fixes, privacy gains, and cost cuts.

7 Shocking AI Red Teaming Lessons

AI red teaming: 7 shocking lessons on AI safety evals, deceptive AI behavior, and WEF/OpenAI guidance. Practical checklist inside.

HBM4 memory: 7 shocking facts

HBM4 memory is redefining AI bandwidth: HBM4 vs HBM3E, 2048-bit interface, vendors SK hynix/Micron/Samsung, supply risks in 2025–2026.

7 Killer: Llama 4 on Bedrock

Llama 4 on AWS Bedrock in production: open-weight models (Scout, Maverick), pricing, context, enterprise rollout — 7 killer insights.

10 Powerful Ways

AI Builds AI

AI builds AI goes mainstream: AutoML, NAS, AI agents, HPO, synthetic data cut costs and time-to-market. See our 2025 playbook.

EU AI Act 2025: Bold Rules + €1B aiinnovationhub.com

EU AI Act 2025: timeline, GPAI and high-risk requirements; EU “Apply AI” (€1B), America’s AI Action Plan and strict California rules.

Open-Weights LLM for Small Business: 7 Proven Wins

open-weights LLM for small business – licenses Llama/Qwen/Mistral, VPS or Bedrock, cost per 1000 tokens.

AI inference cost 2025: shocking drop by 280

- faster, cheaper, more compact

The GPT-3.5 impedance is about 280 times cheaper, and the small and local models catch up with the closed ones

AI digital doubles copyright:

what is possible that is not possible in 2025

AI digital dual copyright in Hollywood: Sora 2, image rights, licenses and deepfake-laws.

Hands-on C2PA Content Credentials:

add metadata

Practical guide to C2PA Content Credentials: metadata, YouTube/TikTok verification, SynthID, restrictions and bypasses.

Introduction: Why the AI industry is now in the spotlight

Artificial intelligence (AI) is one of today's fastest-developing technologies and is already transforming business, science, medicine, and everyday life. But rapid growth brings challenges: new regulations, ethical dilemmas, technological constraints, and market shifts. In this article, we examine the key AI industry disruptions that are shaping new realities and forcing businesses and regulators to adapt.

AI Industry Disruptions: Navigating the Tectonic Shifts Shaping Our Tomorrow

Welcome to your central hub for understanding the forces reshaping the world. AI industry disruptions are no longer a future possibility; they are the present reality. This isn’t about incremental improvements; it’s about foundational shifts in technology, policy, and markets that are redrawing the global economic map. We cut through the hype to deliver clear, concise, and actionable insights on the most critical developments. From the halls of government to the server farms powering the next big model, we track the signals that matter.

This comprehensive analysis breaks down the core areas of disruption, providing a clear-eyed view of the landscape. We’ll explore the tightening grip of regulation, the blistering pace of technical innovation, the geopolitical battle for hardware supremacy, and the volatile flows of capital and talent. Our goal is simple: to equip you with the knowledge needed to not just survive, but thrive, in this new era.

Part 1: The Regulatory Reckoning: AI Policy Changes and Governance

The Wild West era of AI is coming to a rapid close. As the technology’s power and potential for harm have become undeniable, governments worldwide are scrambling to erect guardrails. These AI regulation updates represent one of the most significant AI industry disruptions, creating both compliance challenges and strategic opportunities.

The EU AI Act: The World’s First Comprehensive Framework

The European Union has fired the starting gun with its landmark AI Act, establishing a risk-based regulatory framework. This is a monumental AI policy change that will have a global impact, much like the GDPR did for data privacy.

- The Risk-Based Pyramid: The Act categorizes AI systems into four levels of risk:

- Unacceptable Risk: Systems deemed a clear threat to safety, livelihoods, and rights are banned. This includes social scoring and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes (with narrow exceptions).

- High-Risk: This is the most impactful category for enterprises. It includes AI used in critical infrastructure, medical devices, education, employment, and essential private and public services. These systems face stringent requirements for risk assessments, high-quality data sets, human oversight, and robustness.

- Limited Risk: Systems like chatbots have transparency obligations—users must know they are interacting with an AI.

- Minimal Risk: The vast majority of AI applications, like spam filters, are largely unregulated.

- Focus on General-Purpose AI (GPAI): The Act specifically targets powerful foundation models and LLMs. Providers of these models face specific transparency requirements, including detailed technical documentation and a publicly available summary of the content used for training. The most powerful models, classed as posing “systemic risk,” are subject to even closer scrutiny, including mandatory model evaluations and adversarial testing.

- Business Impact: For any company operating in or selling to the EU, compliance is non-negotiable. This means building “regulation-by-design” into AI development pipelines, investing in robust documentation, and establishing clear AI ethics governance protocols. The cost of non-compliance is steep. For the most serious violations (prohibited practices), fines reach up to €35 million or 7% of worldwide annual turnover, whichever is higher.
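The following minimal Python sketch makes the tiers and the penalty ceiling concrete. It assigns tiers using only the examples named above, and the obligation lists paraphrase this section rather than quote the legal text. The identifiers (`RiskTier`, `EXAMPLE_TIERS`, `max_fine_eur`) are hypothetical. Classifying a real system requires legal review of the Act itself. The fine function models the ceiling for the most serious violations for a company (undertaking) as the higher of €35 million or 7% of worldwide annual turnover.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical use-case labels mapped to tiers, using only the examples in this section.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "hiring_candidate_screening": RiskTier.HIGH,  # employment is a high-risk area
    "customer_service_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

# Paraphrased obligations per tier (not the legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned: do not place on the EU market"],
    RiskTier.HIGH: ["risk assessment", "high-quality data sets", "human oversight", "robustness"],
    RiskTier.LIMITED: ["tell users they are interacting with an AI"],
    RiskTier.MINIMAL: [],
}


def obligations_for(use_case: str) -> list[str]:
    """Look up the paraphrased obligations for a known example use case."""
    return OBLIGATIONS[EXAMPLE_TIERS[use_case]]


def max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Ceiling for the most serious violations: EUR 35M or 7% of turnover, whichever is higher.

    Integer arithmetic avoids floating-point rounding on large turnovers.
    """
    return max(35_000_000, worldwide_annual_turnover_eur * 7 // 100)


if __name__ == "__main__":
    print(obligations_for("customer_service_chatbot"))
    print(max_fine_eur(100_000_000))    # 7% is EUR 7M, so the fixed EUR 35M is higher
    print(max_fine_eur(2_000_000_000))  # 7% of EUR 2B is EUR 140M
```

The two branches cross at €500 million in turnover. Below that, the fixed €35 million amount is the higher figure. Above it, the 7% branch dominates.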

The Global Patchwork: US, China, and Beyond

While the EU is leading, other major powers are crafting their own responses, creating a complex global patchwork.

- United States: The US approach is more fragmented, relying on a combination of executive orders and agency-specific guidance rather than a single statute. President Biden’s 2023 Executive Order on AI focused on safety, security, and equity. It required developers of the most powerful AI models to share their safety test results with the government, directed the development of standards for authenticating official content to combat deepfakes, and addressed algorithmic discrimination. That order was revoked in January 2025, which shows how quickly the US direction can change. The AI policy changes here are less about a single law and more about whole-of-government alignment that each administration can reset.

- China: China has been proactive in regulating AI, with a focus on algorithmic recommendation systems, generative AI, and a strong emphasis on “core socialist values.” Its regulations require security assessments and anti-discrimination measures for public-facing AI. For global businesses, this means navigating three very different regulatory philosophies: the EU’s rights-based approach, the US’s security-and-innovation-focused model, and China’s state-controlled model.

The Bottom Line for Business: The era of “move fast and break things” is over. Proactive AI ethics governance is no longer a PR exercise but a core business function. Companies must establish cross-functional teams (legal, technical, ethics) to monitor these AI regulation updates, conduct internal audits, and embed compliance into their AI lifecycle. The disruption here is a forced maturation of the entire industry.

Part 2: The Engine Room: LLM Breakthroughs and the GenAI Market Shifts

If regulation is the brake, then innovation in Large Language Models is the accelerator. The pace of LLM breakthroughs is breathtaking, driving constant GenAI market shifts and redefining what is possible.

The Race to Multimodality and Agentic Systems

The initial wave of LLMs was text-in, text-out. The current disruption is about models that can seamlessly understand, process, and generate multiple types of data—text, images, audio, and video—simultaneously.

- Beyond Text: Models like GPT-4V (Vision), Gemini, and Claude 3 are inherently multimodal. They can analyze a photograph, a spreadsheet, and a paragraph of text in a single query. This breaks down silos and enables far more complex and useful applications, from a customer service agent that can “see” a product issue to a research assistant that can parse scientific papers and charts.

- The Rise of AI Agents: The next frontier is moving from passive tools to active agents. These are AI systems that can perform complex, multi-step tasks autonomously. Instead of just writing an email, an agent could research a topic, draft a report, create accompanying slides, and schedule a meeting to present them. This shift from assistance to action represents a fundamental GenAI market shift, moving up the value chain from content creation to workflow automation.
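The difference between a tool and an agent is the loop. An agent turns a goal into a plan, executes each step, and feeds each result into the next one. The sketch below illustrates that loop in Python under heavy simplifying assumptions. The four tools are hypothetical stubs that return strings, and `plan()` is hard-coded where a real agent would ask an LLM to produce and revise the plan.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tool stubs standing in for search, document, slide, and calendar integrations.
def research(topic: str) -> str:
    return f"notes on {topic}"


def draft_report(notes: str) -> str:
    return f"report from {notes}"


def make_slides(report: str) -> str:
    return f"slides for {report}"


def schedule_meeting(slides: str) -> str:
    return f"meeting booked to present {slides}"


TOOLS: dict[str, Callable[[str], str]] = {
    "research": research,
    "draft_report": draft_report,
    "make_slides": make_slides,
    "schedule_meeting": schedule_meeting,
}


def plan(goal: str) -> list[str]:
    """Return tool names to call in order; a real agent would generate this with an LLM."""
    return ["research", "draft_report", "make_slides", "schedule_meeting"]


@dataclass
class AgentRun:
    goal: str
    log: list[str] = field(default_factory=list)


def run_agent(goal: str, max_steps: int = 10) -> AgentRun:
    """Execute the plan step by step, passing each tool's output to the next tool."""
    run = AgentRun(goal)
    artifact = goal
    for step, tool_name in enumerate(plan(goal)):
        if step >= max_steps:  # step budget: LLM-generated plans in real agents can run long
            break
        artifact = TOOLS[tool_name](artifact)
        run.log.append(f"{tool_name}: {artifact}")
    return run


if __name__ == "__main__":
    for line in run_agent("Q3 churn").log:
        print(line)
```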

The Scramble for Efficiency: Smaller, Faster, Cheaper

The obsession with model size (parameter count) is giving way to a focus on efficiency. The astronomical cost of training and running trillion-parameter models is unsustainable for most applications. This is driving several key LLM breakthroughs:

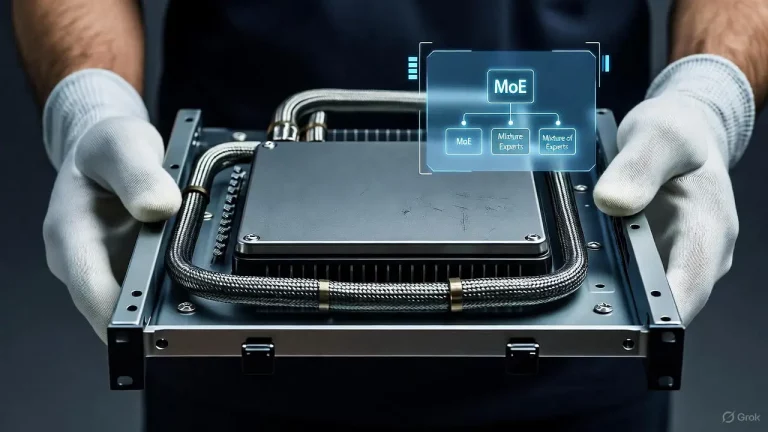

- Mixture-of-Experts (MoE): Models like Mixtral 8x22B use an MoE architecture, in which a router sends each token to only a few expert sub-networks (2 of 8 in Mixtral). As a result, only a fraction of the total parameters (about 39B of 141B for Mixtral 8x22B) is active at each step. This makes these models far more efficient to run while maintaining performance close to that of much larger models, dramatically reducing inference costs.

- Open Source vs. Closed Source: The open-source community, led by Meta’s Llama series and a plethora of fine-tuned variants, is putting immense pressure on closed-source giants like OpenAI and Google. These open-source models are “good enough” for a vast number of enterprise use cases and offer greater control, customizability, and data privacy. This is a major GenAI market shift, democratizing access to powerful AI and forcing proprietary players to continuously innovate just to stay ahead.

- Specialized Models: The one-model-fits-all approach is being challenged by a new wave of specialized models fine-tuned for specific domains like law, medicine, or coding. These models often outperform general-purpose models on their specific tasks at a fraction of the cost and size.
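At the core of Mixture-of-Experts is a router. It scores every expert for each token, keeps only the top-k, and mixes their outputs with softmax weights renormalized over the chosen experts. The pure-Python sketch below uses 8 experts with top-2 routing, matching Mixtral's configuration. Each "expert" is a toy element-wise scaling rather than a feed-forward network, and the random weights are placeholders, not a trained model.

```python
import math
import random


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class TinyMoE:
    """Toy MoE layer: a linear router picks top_k experts per token."""

    def __init__(self, num_experts: int = 8, top_k: int = 2, dim: int = 4, seed: int = 0):
        rng = random.Random(seed)
        # Placeholder "experts": element-wise scaling vectors instead of real FFNs.
        self.experts = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Router: one weight vector per expert; its score is a dot product with the token.
        self.router = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
        self.top_k = top_k
        self.expert_calls = [0] * num_experts  # how often each expert actually ran

    def forward(self, token: list[float]) -> tuple[list[float], list[int]]:
        scores = [sum(w * x for w, x in zip(r, token)) for r in self.router]
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        gates = softmax([scores[i] for i in chosen])  # renormalize over chosen experts only
        out = [0.0] * len(token)
        for gate, i in zip(gates, chosen):
            self.expert_calls[i] += 1  # only the chosen experts do any compute
            expert_out = [w * x for w, x in zip(self.experts[i], token)]
            out = [o + gate * e for o, e in zip(out, expert_out)]
        return out, chosen


if __name__ == "__main__":
    moe = TinyMoE()
    tokens = [[0.5, -0.2, 0.1, 0.9], [-0.7, 0.3, 0.8, 0.0], [0.1, 0.1, -0.4, 0.6]]
    for t in tokens:
        _, chosen = moe.forward(t)
        print("experts used:", chosen)
    # 3 tokens x 2 experts = 6 expert calls, versus 24 for a dense layer using all 8.
    print("total expert calls:", sum(moe.expert_calls))
```

Compute per token scales with `top_k` rather than with the number of experts. This is why MoE models are cheaper to run than dense models with the same total parameter count. Memory, however, still has to hold every expert.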

The Bottom Line for Business: The “best” model is no longer a simple choice. The strategy must be multi-faceted. Use powerful, expensive closed-source models for cutting-edge, high-stakes tasks. Leverage efficient open-source or specialized models for high-volume, routine, or sensitive applications. The disruption here is the end of model hegemony and the beginning of a strategic, portfolio-based approach to AI procurement and development.
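One way to put a portfolio into practice is a routing rule that picks a model tier for each task. The Python sketch below encodes the guidance above. The `Task` fields and tier labels are hypothetical. One priority is an assumption rather than something the article prescribes: sensitive data goes to self-hosted open-weight models even when the task is high-stakes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    high_stakes: bool     # errors are costly, or the task needs frontier capability
    sensitive_data: bool  # data that should not leave company infrastructure


def choose_model_tier(task: Task) -> str:
    """Map a task to a model tier following a portfolio approach (illustrative rules)."""
    if task.sensitive_data:
        # Assumed priority: data control outranks raw capability.
        return "self-hosted open-weight"
    if task.high_stakes:
        return "closed-source frontier"
    # High-volume, routine work defaults to cheaper models.
    return "open-weight or specialized"


if __name__ == "__main__":
    for t in [
        Task("board-level market analysis", high_stakes=True, sensitive_data=False),
        Task("ticket tagging", high_stakes=False, sensitive_data=False),
        Task("HR records summary", high_stakes=True, sensitive_data=True),
    ]:
        print(f"{t.name}: {choose_model_tier(t)}")
```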

Part 3: The Physical Foundation: AI Chip Supply and Geopolitics

Every AI model, no matter how sophisticated, runs on physical hardware. The battle for control over the AI chip supply is perhaps the most critical and precarious of all AI industry disruptions. It’s where technology, economics, and geopolitics violently collide.

The NVIDIA Monopoly and the Challengers

NVIDIA’s GPUs, particularly its H100 and next-generation Blackwell platforms, are the undisputed gold standard for training and running large-scale AI models. The company has achieved a near-monopoly, commanding an estimated 80-90% of the AI accelerator market.

- The Software Moat: NVIDIA’s dominance isn’t just about hardware; it’s about CUDA, the software platform and ecosystem that developers are deeply locked into. Recreating this software stack is a monumental challenge for competitors.

- The Rise of Challengers: The market’s dependence on a single supplier is a massive risk, spurring intense competition.

- AMD: With its Instinct MI300 series accelerators, AMD is the most direct competitor, offering compelling performance and aggressively pushing its ROCm software stack as a CUDA alternative.

- Custom Silicon (ASICs): The largest tech giants are not waiting. Google has its Tensor Processing Units (TPUs), Amazon has Trainium and Inferentia chips, and Microsoft has announced its own Azure Maia AI accelerator. This vertical integration is a key strategy to control costs, ensure supply, and optimize for their specific workloads.

- Startups: Companies like Cerebras and SambaNova are building radically different architectures designed specifically for AI and claim large performance gains for certain types of models.

Geopolitics and the Choke Points

The AI chip supply chain is a global web with critical choke points, and it has become a primary battlefield in the US-China tech war.

- TSMC’s Central Role: Taiwan Semiconductor Manufacturing Company (TSMC) fabricates over 90% of the world’s most advanced chips, including those for NVIDIA, AMD, and Apple. This creates a massive geopolitical and logistical risk, making the entire AI industry vulnerable to regional instability.

- Export Controls: The US has implemented sweeping export controls aimed at curtailing China’s access to advanced AI chips and the equipment to make them. This has forced Chinese tech giants like Alibaba and Baidu to rely on older, less powerful chips and accelerate their own domestic chip development efforts through companies like SMIC.

The Bottom Line for Business: Dependency on a single vendor or a geopolitically fraught supply chain is a critical business risk. Companies must:

- Diversify Hardware: Explore alternatives from AMD and cloud-specific ASICs (like AWS Inferentia) for inference workloads to reduce costs and dependency.

- Factor in Geopolitics: Any long-term AI strategy must have contingency plans for supply chain disruptions. This could mean locking in long-term supply contracts, over-provisioning cloud credits, or designing software to be hardware-agnostic.

- Focus on Inference Efficiency: With training dominated by a few players, the bigger market opportunity is in efficient inference. Optimizing models to run cheaper and faster on available hardware is a key competitive advantage.
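Designing software to be hardware-agnostic usually means placing a thin interface between application code and the accelerator runtime, then choosing a backend at startup from a preference list. The Python sketch below shows that pattern only. The backend names, availability checks, and `run` functions are hypothetical placeholders. Real implementations would wrap vendor runtimes such as CUDA, ROCm, or AWS Neuron.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Backend:
    name: str
    is_available: Callable[[], bool]           # probe for the device/runtime
    run: Callable[[list[float]], list[float]]  # same contract on every backend


def select_backend(backends: Sequence[Backend], preference: Sequence[str]) -> Backend:
    """Return the first available backend in preference order."""
    by_name = {b.name: b for b in backends}
    for name in preference:
        backend = by_name.get(name)
        if backend is not None and backend.is_available():
            return backend
    raise RuntimeError(f"no available backend among {list(preference)}")


def reference_model(batch: list[float]) -> list[float]:
    """Stand-in for model inference; every backend here computes the same thing."""
    return [2.0 * x for x in batch]


if __name__ == "__main__":
    backends = [
        Backend("nvidia-gpu", lambda: False, reference_model),  # e.g. no GPUs available
        Backend("amd-gpu", lambda: True, reference_model),
        Backend("cpu", lambda: True, reference_model),
    ]
    chosen = select_backend(backends, preference=["nvidia-gpu", "amd-gpu", "cpu"])
    print(chosen.name, chosen.run([1.0, 2.5]))
```

Because the preference list is data, switching vendors during a supply shortage becomes a configuration change rather than a code change.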

Part 4: The Money Flow: AI Funding Trends, M&A Deals, and Talent Wars

Capital is the fuel for innovation, and its flow is a perfect barometer of market confidence. The current landscape of AI funding trends and AI M&A deals reveals a market in a state of both exuberance and correction.

From Frenzy to Focus: The Evolution of AI Funding

The launch of ChatGPT triggered a venture capital gold rush into generative AI. However, the initial frenzy is now evolving into a more mature, focused phase.

- The “Foundational Model” Bubble: Billions were poured into a small number of companies (OpenAI, Anthropic, Cohere) aiming to build the next great LLM. The astronomical costs and intense competition in this layer have made it a “game of giants,” leading to a concentration of capital.

- Shift to Application and Infrastructure Layers: Savvy investors are now looking downstream. The most promising AI funding trends are in:

- Applications: Startups building specific, defensible products for verticals like legal tech, healthcare, and marketing. These companies solve real business problems and have clearer paths to revenue.

- Infrastructure & Tooling: There is massive demand for the “picks and shovels” of the AI gold rush. This includes everything from vector databases (Pinecone) and MLOps platforms (Weights & Biases) to fine-tuning and evaluation tools. These companies are building the essential plumbing for the AI ecosystem.

AI M&A Deals: The Consolidation Engine

While some startups will go public, many more will be acquired. AI M&A deals are a primary mechanism for large tech companies to acquire talent, technology, and market share quickly.

- Acqui-hires (Talent Acquisitions): In a tight talent market, a primary driver of M&A is the “acqui-hire,” where a company is bought primarily for its engineering and research team. These deals are often a lifeline for startups struggling to find a business model but blessed with top-tier talent.

- Strategic Technology Plays: Larger companies are snapping up startups that fill a critical gap in their AI stack. For example, a cloud provider might acquire a model monitoring company to bolster its AI platform offerings. We expect this trend to accelerate as the market matures and the winners in the application layer become clear.

AI Layoffs and Hiring: The Great Realignment

Paradoxically, the industry is experiencing both massive hiring in AI-specific roles and significant layoffs in other departments.

- The Talent Crunch: There is an intense war for top AI researchers, ML engineers, and prompt engineers. Salaries for these roles are skyrocketing, creating a two-tiered system within tech.

- Efficiency-Driven Layoffs: Concurrently, many large tech companies have announced layoffs, often in non-core or administrative functions. This reflects a strategic pivot: they are cutting costs in some areas to free up capital to invest heavily in their AI futures.

The Bottom Line for Business:

- For Investors: The easy money in foundational models has been made. The next wave of outsized returns will come from identifying the winning applications and infrastructure tools that demonstrate real customer value and efficient unit economics.

- For Companies: To attract and retain top AI talent, you need more than just money. A compelling mission, access to cutting-edge problems, and a strong AI culture are key. Furthermore, keep a close eye on the M&A landscape; the startup you are partnering with today could be part of a competitor tomorrow.

Part 5: The End User: Enterprise AI Adoption and the Productivity Paradox

All these disruptions—regulation, innovation, hardware, and capital—converge at a single point: the enterprise. Enterprise AI adoption is the ultimate test and the largest market opportunity.

Moving from Pilot to Production

The biggest challenge for most companies is moving from experimental proofs-of-concept (POCs) to scalable, production-grade systems. The “productivity paradox” looms: massive investment with unclear or hard-to-measure returns.

- The POC Graveyard: Thousands of AI pilots are stuck in limbo, unable to deliver reliable value at scale. The reasons are often not technical but organizational: lack of clear use cases, poor data quality, and resistance to change.

- The Rise of the AI Center of Excellence (CoE): Successful companies are establishing centralized CoEs. These teams set standards, provide tools, share best practices, and help business units navigate the complex landscape of AI industry disruptions.

Strategic Imperatives for Successful Adoption

To overcome the paradox, enterprises must be strategic.

- Start with the Problem, Not the Technology: The most successful AI projects are driven by a clear business need—automating a costly manual process, personalizing customer interactions, or accelerating R&D.

- Data is Still King: An AI model is only as good as the data it’s trained on. Investing in data governance, quality, and accessible data platforms is a prerequisite.

- Focus on Augmentation, Not Just Automation: The most powerful applications of AI augment human intelligence, not just replace it. A legal AI that helps a lawyer review contracts 10x faster is a win; one that makes error-prone judgments is a disaster.

- Implement Robust LLM Governance: As enterprises adopt LLMs, new risks emerge: data leakage, hallucinations, intellectual property issues, and cost overruns. Companies need clear policies on which models to use for which tasks, how to manage prompts, and how to monitor outputs.
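Policies like these are easiest to enforce when every LLM call passes through a single gateway function. The Python sketch below covers three of the risks named above:

- an allow-list of models per task type;
- a simple redaction step against data leakage, which handles email addresses only and uses a deliberately rough regex;
- a monthly budget cap against cost overruns.

Checks on outputs for hallucinations and IP issues are out of scope. The task types, model IDs, and costs are hypothetical.

```python
import re
from dataclasses import dataclass

# Rough email pattern for illustration; production redaction needs broader PII detection.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class LLMPolicy:
    approved_models: dict[str, set[str]]  # task type -> allowed model IDs
    monthly_budget_usd: float
    spent_usd: float = 0.0

    def is_approved(self, task_type: str, model_id: str) -> bool:
        return model_id in self.approved_models.get(task_type, set())

    def try_charge(self, cost_usd: float) -> bool:
        """Record spend only if it fits within the monthly budget."""
        if self.spent_usd + cost_usd > self.monthly_budget_usd:
            return False
        self.spent_usd += cost_usd
        return True


def prepare_request(policy: LLMPolicy, task_type: str, model_id: str,
                    prompt: str, est_cost_usd: float) -> str:
    """Gate a request: check the model allow-list and budget, then redact the prompt."""
    if not policy.is_approved(task_type, model_id):
        raise PermissionError(f"{model_id} is not approved for {task_type}")
    if not policy.try_charge(est_cost_usd):
        raise RuntimeError("monthly LLM budget exceeded")
    return EMAIL_RE.sub("[REDACTED_EMAIL]", prompt)


if __name__ == "__main__":
    policy = LLMPolicy({"contract_review": {"internal-open-model"}}, monthly_budget_usd=100.0)
    print(prepare_request(policy, "contract_review", "internal-open-model",
                          "Summarize clause 4 and email jane.doe@example.com", 0.5))
```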

The Bottom Line for Business: The disruption here is internal. Adopting AI successfully requires a cultural and operational transformation. It demands leadership buy-in, upskilling of the workforce, and a willingness to experiment and iterate. The companies that succeed will be those that treat AI not as a standalone project, but as a core competency woven into the fabric of their operations.

Conclusion: Synthesizing the Disruptions for a Clear Path Forward

The AI industry disruptions we’ve outlined are not isolated events. They are interconnected forces creating a vortex of change. A new LLM breakthrough in China is scrutinized by regulators in the EU. A bottleneck in the AI chip supply can stall Enterprise AI adoption worldwide. A major AI M&A deal can instantly reshape a competitive landscape.

Navigating this requires a holistic view. You cannot focus on technology while ignoring policy. You cannot chase funding without a viable hardware strategy. The businesses that will lead the next decade are those that can build a cohesive strategy that acknowledges and adapts to all these vectors of change simultaneously.

The message is clear: the age of AI is here, and it is messy, complex, and unforgiving. But for those who take the time to understand these disruptions, to “filter out the noise and keep the essence,” the opportunities are unparalleled. Stay informed, stay agile, and build for a future that is being rewritten, in real-time, by artificial intelligence.

Bookmark this page and check back regularly. We are constantly updating our analyses to reflect the latest AI industry disruptions.
