AI digital doubles copyright: what can be done in 2025 (Sora 2, likeness, NO FAKES Act)

7 HOT AI digital doubles copyright—aiinnovationhub.com

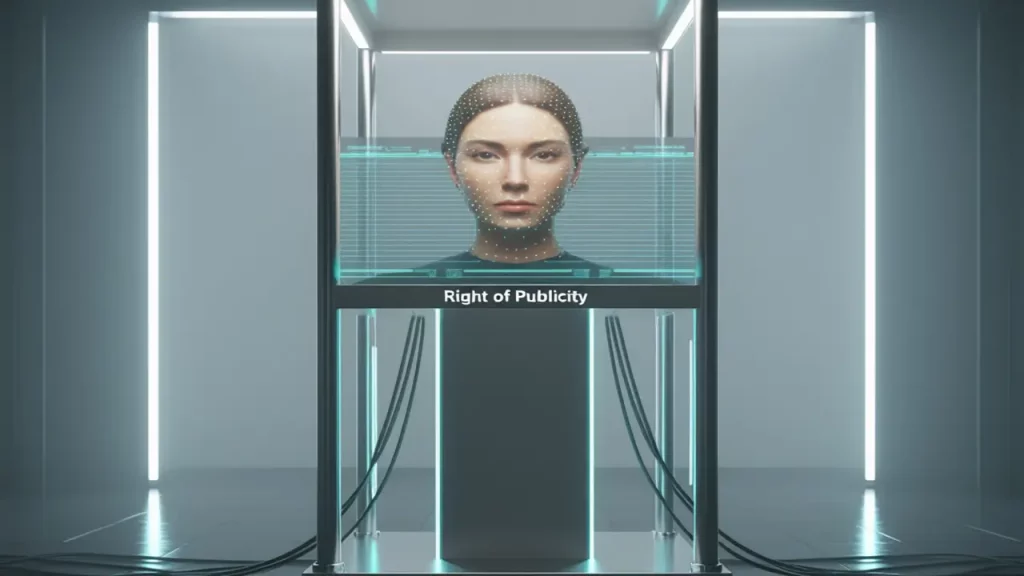

In an era where technology and creativity merge, digital media is undergoing a rapid transformation. AI digital doubles, which can mimic celebrities with unprecedented realism, are at the center of this change, but they also bring complex legal and ethical challenges. The recent controversy surrounding Sora 2, OpenAI’s video-generation model, has pushed these issues into the spotlight, raising questions about AI likeness rights and the right of publicity in the digital age.

As 2025 deepfake legislation, including the proposed NO FAKES Act, aims to protect individuals from unauthorized AI representations, the SAG-AFTRA AI agreement sets new industry standards for compensation and control. Subscribe to the website to stay updated on how these developments are reshaping the future of AI in entertainment.

www.aiinnovationhub.shop – AI tools for business: «I like that everything is organized by use case – you quickly find the right tool». Come in!

Mini review about www.aiinovationhub.com: «A handy guide to trends – I read it before meetings».

The rise of AI digital doubles: What are they?

Imagine a world where digital likenesses of real people are not just possible but increasingly common: this is the rise of AI digital doubles. These AI-generated replicas are designed to mimic the appearance, voice, and even the mannerisms of real people, often celebrities, making digital content more realistic and immersive. From virtual influencers to digital actors in films, AI digital doubles are changing the way we create and consume media. They offer new ways to bring characters to life, but they also come with legal and ethical challenges that the industry is only beginning to address.

One of the most notable controversies in the realm of AI digital doubles involves Sora 2, OpenAI’s video-generation model, which sparked significant debate after its 2025 release. Its use to create realistic digital replicas of celebrities without their explicit consent raised serious questions about the right to one’s own image and the potential for misuse. The controversy underscored the need for clear regulations and guidelines, and the legal issues it exposed have since become a focal point for discussion in the entertainment industry.

As deepfake technology continues to advance, the boundaries of what is possible with AI digital doubles are expanding. These technological advancements have made it easier to create high-fidelity digital replicas, blurring the lines between the real and the virtual. While this opens up new creative possibilities, it also raises concerns about the potential for deception and the ethical implications of using someone’s likeness without their permission. Hollywood, recognizing the potential and the risks, has started to adopt AI digital doubles more frequently, not only for enhancing visual effects but also for addressing practical challenges like posthumous performances and reducing the need for dangerous stunts.

The Screen Actors Guild-American Federation of Television and Radio Artists (SAG-AFTRA) has taken a proactive stance in this evolving landscape. In response to the growing use of AI in the industry, SAG-AFTRA negotiated an agreement that addresses the rights and compensation for actors whose likenesses are used in AI-generated content. This SAG-AFTRA AI agreement is a significant step towards ensuring that actors are fairly compensated and their rights are protected in the digital age. As the use of AI digital doubles becomes more prevalent, such agreements are crucial for maintaining the balance between innovation and ethical responsibility.

www.laptopchina.tech – Chinese laptops: «I picked up a lightweight laptop for editing – the reviews are honest and to the point». Come in!

AI digital doubles copyright

Legal battles: Sora 2 and the copyright conundrum

The legal arena has become a battleground for digital rights, with the Sora 2 controversy at the forefront, testing the limits of existing copyright law. Sora 2, an AI model and app that generates realistic video, including digital likenesses of real people, has come under intense scrutiny. Its ability to create highly detailed, lifelike footage of individuals, sometimes without their explicit consent, has drawn objections and legal challenges from those whose likenesses and works have been used. These challenges concern not only the generated content itself but also the broader AI digital doubles copyright landscape.

At the heart of the Sora 2 controversy is the question of AI training data copyright. Models like Sora 2 are trained on vast datasets that often include images, video, and other media collected from the internet. AI developers generally argue that such training is transformative and therefore qualifies as fair use, but many copyright holders disagree: they argue that using their works without permission, even in transformed form, violates their intellectual property rights. This debate challenges the legal frameworks that govern how data is used in AI training, and the outcomes of these disputes could set important precedents for how AI-generated content is regulated and protected in the future.

The legal challenges faced by Sora 2 are symptomatic of a larger issue in the tech industry: the rapid advancement of AI technology has outpaced existing laws. As AI continues to evolve, the legal community is grappling with how to address the unique challenges it presents. For instance, the creation of digital doubles using AI raises questions about the right of publicity and the control individuals have over their own image and identity.

These issues are particularly relevant in the entertainment industry, where digital doubles can blur the line between real and virtual performers. The Sora 2 controversy is a critical test that could influence how these rights are interpreted and enforced in the digital age.

www.smartchina.io – Chinese smartphones: «I chose a camera phone for shooting reels – fair comparisons, no fluff». Come in!

Mini review about www.aiinovationhub.com: «Quick checklists – top-notch».


Deepfake legislation: What the 2025 laws mean for AI

As the dust settles on the Sora 2 controversy, the focus shifts to 2025 deepfake legislation, which could reshape the AI landscape in profound ways. The proliferation of AI-generated content has raised significant concerns about the misuse of deepfake technology, particularly in the entertainment industry. In response, U.S. lawmakers reintroduced the NO FAKES Act in 2025, a bill designed to protect individuals from unauthorized digital replicas of their voice and likeness that could harm their reputation or privacy. If enacted, it would make those who create or distribute such replicas without consent legally liable, signaling that this kind of misuse would no longer be taken lightly.

The NO FAKES Act is not only about liability; the broader 2025 push also aims to set standards for transparency and accountability in how AI-generated content is created and distributed. For instance, some proposals call for clear labeling of deepfake content so that consumers can distinguish real material from AI-generated material, and the NO FAKES Act itself would require online platforms to remove unauthorized replicas once notified. This could have far-reaching implications for how AI digital doubles are created and used, particularly in the entertainment industry. Producers and creators will need to be more careful about the authenticity of the content they produce, potentially leading to more responsible and ethical use of AI technology.

Moreover, the new rules are expected to affect how AI training data is collected and used. AI training data copyright has long been a contentious issue, with debates over who owns the data used to train AI models and whether individuals should have a say in how their likeness is used. The 2025 deepfake proposals address part of this concern by requiring consent before a person’s voice or likeness is digitally replicated.

This could limit the sources and methods used to train AI models, forcing developers to find alternative, more ethical ways to gather and use data. As a result, the entertainment industry may see a shift towards more transparent and consensual practices when creating AI digital doubles, which could set a new precedent for the use of AI in media.

Hollywood’s response to these new rules will be crucial in shaping the future of AI ethics and practices. Because the entertainment industry is at the forefront of AI use, the way studios and production companies adapt to the NO FAKES Act, should it become law, will likely influence other sectors. The industry has already shown a willingness to engage with these issues, as evidenced by the ongoing negotiations between SAG-AFTRA and AI developers. These discussions are expected to produce guidelines that balance the creative potential of AI with the need to protect the rights of actors and other creatives.

www.andreevwebstudio.com – developer portfolio: «I love the clean design and clear case studies – you can see the experience». Come in!

Mini review on www.aiinovationhub.com: «Explains complex topics in simple language – respect».


Hollywood weighs in: SAG-AFTRA and the AI agreement

Hollywood, never one to shy away from the cutting edge, has weighed in through SAG-AFTRA’s AI agreement, which sets new standards for the industry. SAG-AFTRA has long championed the rights and well-being of its members. In the face of rapidly advancing AI technology, the union has taken a proactive stance to ensure that actors’ digital likenesses are protected and that they receive fair compensation for their use. The agreement is a significant step toward a framework that balances the innovative potential of AI with the ethical considerations and legal rights of performers.

The SAG-AFTRA AI agreement includes several key provisions designed to safeguard actors’ interests. One of the most notable is the inclusion of AI likeness rights. This ensures that actors have control over how their digital likenesses are used, preventing unauthorized exploitation and ensuring that they are compensated for any AI-generated content that features their image or voice. The agreement also addresses data privacy, a critical concern in an era where vast amounts of personal information can be used to train AI models. By setting these standards, SAG-AFTRA is helping to create a more transparent and fair environment for its members, which is essential as AI becomes an increasingly integral part of the entertainment landscape.

The Sora 2 controversy has highlighted the need for robust guidelines in AI-generated media. Users generated videos resembling real people, bringing questions about copyright, consent, and the right of publicity to the forefront. SAG-AFTRA’s response to this and similar cases has been to advocate for clear and enforceable standards. The union’s efforts have benefited its members and have also set a precedent for the broader industry, encouraging other organizations to follow suit.

In addition to the union’s efforts, the proposed NO FAKES Act is another critical piece of legislation aimed at regulating deepfake technology. It targets the ethical and legal challenges posed by deepfakes, which can be used to create misleading or harmful content. By supporting the bill, Hollywood is demonstrating a commitment to responsible AI use, in line with the industry’s broader push for AI ethics. The combination of union agreements and legislative action is creating a more secure and ethical environment for AI in the entertainment industry, one where creativity and technology can coexist without compromising the rights and dignity of performers.

www.jorneyunfolded.pro – beautiful places + booking: «Put together a route and tickets in one evening – convenient and inspiring». Come in!


Ethical considerations: Navigating the right of publicity in AI

Beyond the legal battles, the ethical considerations surrounding the right of publicity in AI raise profound questions about identity and consent. As AI digital doubles become more sophisticated and widely used, they challenge traditional notions of how a person’s likeness can be protected and utilized. These digital replicas can perform actions, deliver lines, and even emote in ways that closely mimic the real individuals they represent, leading to concerns about the erosion of personal boundaries and the potential for misuse.

SAG-AFTRA has taken a significant step in addressing these ethical concerns with its AI agreement. The agreement sets industry standards for the ethical use of digital likenesses, ensuring that actors have control over how their images and voices are used in AI-generated content. It includes provisions for compensation, consent, and the right to refuse the use of their likeness in certain contexts, providing a framework that balances the interests of creators and the rights of individuals.

However, the rise of deepfake technology complicates the ethical landscape further. Deepfakes, which are AI-generated videos or images that convincingly depict people doing or saying things they never actually did, blur the lines between consent and exploitation. While deepfake technology has the potential to revolutionize entertainment and media, it also poses significant risks. The ability to create hyper-realistic content without the subject’s knowledge or consent can lead to harmful consequences, such as defamation, harassment, and the violation of personal privacy. This technology challenges the very essence of the right of publicity, as it allows for the unauthorized use of a person’s likeness in ways that were previously unimaginable.

Navigating these ethical dilemmas requires a delicate balance between fostering innovation and protecting individual rights. The NO FAKES Act is one legislative effort aimed at these issues. The bill would regulate the creation and distribution of unauthorized digital replicas and give individuals the right to seek legal recourse when their likenesses are used without consent. By setting clear rules and remedies, it aims to prevent the misuse of AI while still allowing for its creative and beneficial applications.

The ethical use of AI in digital representation is not just a legal issue but a societal one. As the technology advances, it is crucial for stakeholders, including content creators, tech companies, and regulatory bodies, to engage in ongoing dialogue and collaboration. This ensures that the development of AI digital doubles and deepfake technology is guided by principles of respect, transparency, and accountability. The right of publicity in AI is a complex and evolving area, and the decisions made today will have lasting implications for how we navigate the intersection of technology and human rights in the future.


Final verdict

So, what does the future hold as we navigate the complex interplay of technology, law, and ethics in the world of AI digital doubles? The rise of these digital replicas has brought a host of legal and ethical challenges, particularly in the realm of copyright. The copyright issues around AI digital doubles, exemplified by the Sora 2 controversy, highlight the need for clear legal frameworks that protect both creators and the subjects of these digital representations. As the technology continues to advance, the legal landscape must evolve to keep pace, ensuring that individuals can control their digital likenesses and that creators are fairly compensated for their work.

The deepfake legislation of 2025 is a significant step in this direction, aiming to protect individuals from unauthorized and potentially harmful AI representations. These efforts, led by the proposed NO FAKES Act, could reshape how the law treats AI training data and digital replicas, providing a more robust and nuanced approach to the ethical use of AI. The Hollywood community, recognizing the potential impact of AI on the entertainment industry, has also taken proactive steps. The SAG-AFTRA AI agreement sets industry standards for digital likeness rights, emphasizing consent and control over AI portrayals. The agreement not only provides a benchmark for fair practices but also signals a broader shift toward responsible AI use in the media.

Ethical considerations remain at the forefront of discussions surrounding AI digital doubles. Hollywood’s ethical guidelines, which have been shaped by ongoing debates and industry agreements, stress the need for transparency, consent, and the protection of individual rights. As we move forward, it is clear that the intersection of technology, law, and ethics will continue to be a dynamic and evolving field. The challenges we face today are just the beginning, and it will be the collective effort of lawmakers, industry leaders, and the public that will ultimately determine the path we take. In this rapidly changing landscape, one thing is certain: the future of AI digital doubles will be shaped by our ability to balance innovation with responsibility.
