AI builds AI: 10 Powerful Ways It’s Reshaping Automation

Few advances in technology have captured the imagination like machine learning, and now even the process of creating these intelligent systems is being automated. AI builds AI, and this shift is already underway with technologies like AutoML and neural architecture search (NAS). These tools are making machine learning more accessible while pushing the boundaries of what is possible. From automated hyperparameter tuning to synthetic data generation, the future of AI is increasingly shaped by AI itself. Subscribe to the website to stay ahead of the curve and follow how these technologies are transforming artificial intelligence.

Mini-review: www.aiinnovationhub.com. I like it for its concise, clear discussions, and I use it as my home base for AI.

AutoML: Simplifying the machine learning process

Imagine reducing the complex and often daunting task of building machine learning models to a few clicks. That is the promise of AutoML, or automated machine learning, which lets developers and data scientists streamline their workflows without extensive expertise in model selection and tuning. An AutoML system trains and evaluates candidate models on a given dataset and keeps the best performer, significantly reducing the time and effort required to build accurate and efficient models.
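To make the model-selection step concrete, here is a minimal sketch that scores candidates with k-fold cross-validation and keeps the one with the lowest error. The toy dataset, the two candidates (`fit_mean`, `fit_line`), and every function name are assumptions made for illustration; real AutoML platforms search far larger model families.

```python
import random
from statistics import mean

def fit_mean(xs, ys):
    """Baseline candidate: always predict the mean of the training targets."""
    m = mean(ys)
    return lambda x: m

def fit_line(xs, ys):
    """Candidate: ordinary least-squares line y = a*x + b."""
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var if var else 0.0
    b = my - a * mx
    return lambda x: a * x + b

def cv_mse(fit, xs, ys, k=5):
    """Mean squared error averaged over k cross-validation folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        test_idx = set(range(fold, n, k))  # every k-th point is held out
        train_x = [xs[i] for i in range(n) if i not in test_idx]
        train_y = [ys[i] for i in range(n) if i not in test_idx]
        model = fit(train_x, train_y)
        fold_errors.append(mean((model(xs[i]) - ys[i]) ** 2 for i in test_idx))
    return mean(fold_errors)

def select_model(candidates, xs, ys):
    """Return the name of the candidate with the lowest CV error, plus all scores."""
    scores = {name: cv_mse(fit, xs, ys) for name, fit in candidates.items()}
    return min(scores, key=scores.get), scores

# Hypothetical toy data: a noisy straight line.
rng = random.Random(0)
xs = [i / 10 for i in range(50)]
ys = [3.0 * x + 1.0 + rng.gauss(0, 0.1) for x in xs]

CANDIDATES = {"mean": fit_mean, "line": fit_line}
best_name, cv_scores = select_model(CANDIDATES, xs, ys)
print(best_name, cv_scores)
```

Scoring on held-out folds rather than on the training data is what keeps the selection from simply rewarding the most flexible model.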

One of the most prominent examples of AutoML in action is Google Cloud AutoML. This platform empowers users to create custom machine learning models with ease, even if they have limited experience in the field. By providing a user-friendly interface and powerful backend algorithms, Google Cloud AutoML allows developers to focus on their core business problems rather than getting bogged down in the technical intricacies of model building. This democratization of machine learning is a significant step forward, enhancing accessibility and enabling a broader range of professionals to leverage AI in their work.

Another critical aspect of AutoML is automated hyperparameter tuning. Hyperparameters are the settings that control how a model learns, such as the learning rate or regularization strength, and choosing them well is crucial for performance. Traditionally, tuning has been time-consuming and has required a deep understanding of the algorithms involved. AutoML automates it: the system iteratively tries configurations, scores each one on held-out data, and keeps the best it finds. This speeds up the development cycle and tailors the model to the dataset, which typically improves accuracy and reliability.
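The try-score-keep loop can be sketched with random search, one of the simplest tuning strategies. The `validation_loss` function below is a hypothetical stand-in for training a model and scoring it on held-out data, and the search ranges are assumptions chosen for the example.

```python
import math
import random

def validation_loss(learning_rate, regularization):
    """Hypothetical stand-in for 'train a model, then score it on held-out data'.

    By construction the minimum sits at learning_rate=0.01, regularization=0.1.
    """
    return (math.log10(learning_rate) + 2) ** 2 + (math.log10(regularization) + 1) ** 2

def random_search(n_trials=200, seed=0):
    """Sample configurations log-uniformly and keep the best one seen so far."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = {
            "learning_rate": 10 ** rng.uniform(-5, 0),   # 1e-5 .. 1
            "regularization": 10 ** rng.uniform(-4, 1),  # 1e-4 .. 10
        }
        loss = validation_loss(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss

best_config, best_loss = random_search()
print(best_config, best_loss)
```

Sampling on a log scale is a common choice because useful learning rates and regularization strengths tend to span several orders of magnitude.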

In addition to model selection and hyperparameter tuning, AutoML also addresses the often-overlooked but vital step of data preprocessing. Data cleaning and transformation are essential for preparing datasets for machine learning, but they can be labor-intensive and require significant domain knowledge. AI-driven preprocessing tools within AutoML platforms can automatically detect and correct common issues such as missing values, outliers, and inconsistent formatting. This reduces the time developers spend on data preparation, letting them focus on model deployment and business insights. By integrating these features, AutoML automates more of the ML pipeline, making the entire process more efficient and accessible.
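As a rough illustration of those three fixes on a single numeric column, the sketch below normalizes formatting, imputes missing entries with the median, and clips outliers. The helper names, the decimal-comma handling, and the clipping rule (median plus or minus `k` times the median absolute deviation) are assumptions for the example, not the behavior of any particular AutoML product.

```python
import math
from statistics import median

def parse_number(raw):
    """Parse a raw cell into a float, or return None if it is missing or malformed.

    Assumption: a comma is a decimal separator ("3,0" means 3.0).
    """
    if raw is None:
        return None
    try:
        value = float(str(raw).strip().replace(",", "."))
    except ValueError:
        return None
    return None if math.isnan(value) else value

def clean_column(raw_values, k=3.0):
    """Normalize formatting, impute missing values, and clip outliers."""
    parsed = [parse_number(v) for v in raw_values]
    present = [v for v in parsed if v is not None]
    if not present:
        raise ValueError("column has no usable values")
    center = median(present)
    mad = median(abs(v - center) for v in present)  # median absolute deviation
    low, high = center - k * mad, center + k * mad
    cleaned = []
    for v in parsed:
        v = center if v is None else v      # impute missing entries
        if mad > 0:
            v = min(max(v, low), high)      # clip outliers into [low, high]
        cleaned.append(v)
    return cleaned

raw = ["1.0", " 2.0", None, "3,0", "n/a", "4.0", "100.0"]
print(clean_column(raw))
```

Median-based statistics are used here because, unlike the mean and standard deviation, they are not dragged around by the very outliers being clipped.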

If you need to choose AI services for routine business tasks, see www.aiinnovationhub.shop: honest reviews of tools and case studies that help you avoid wasting your budget.

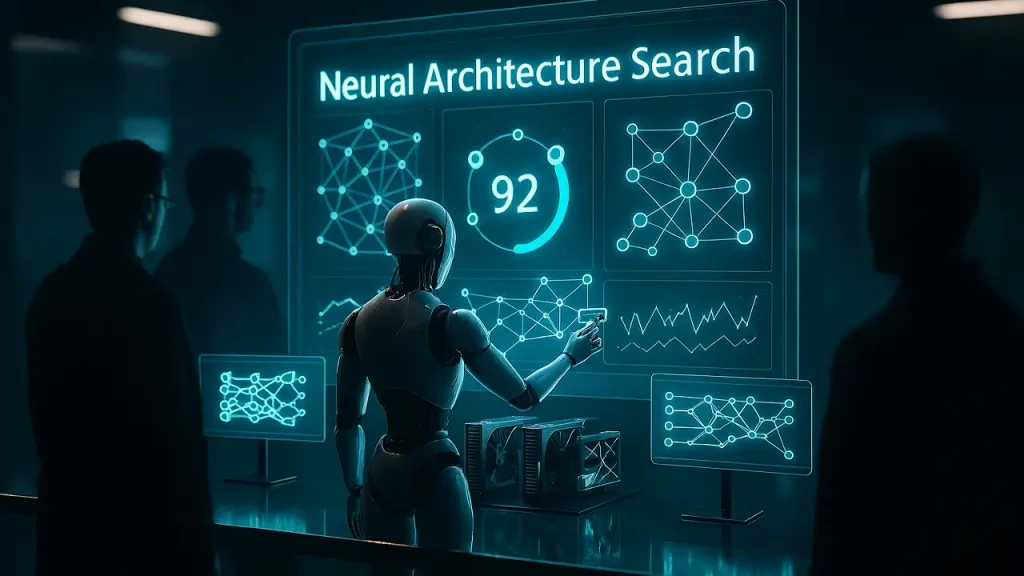

Neural architecture search: Evolving deep learning models

Neural architecture search, a subset of AutoML, pushes the boundaries further by automatically discovering the most efficient deep learning architectures. This process significantly reduces the human effort required to design and optimize models, making it a powerful tool in the machine learning toolkit. By automating the design process, NAS can explore a vast number of architectural configurations, identifying those that perform best for specific tasks. This not only saves time but also ensures that the models are optimized for efficiency and accuracy.

One of the most fascinating NAS strategies mimics natural selection (others rely on reinforcement learning or gradient-based search). Candidate architectures evolve through iterative testing: weaker ones are discarded, while stronger ones are mutated and recombined. This evolutionary approach lets NAS keep improving its candidates, leading to performance that might be difficult to achieve through manual design. A closely related example is Google’s AutoML-Zero, a research project that used evolutionary search to assemble complete machine learning algorithms from basic mathematical operations, with minimal human-designed components. It hints at how far automated design can go and opens up new possibilities for the future of machine learning.
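The discard-and-refine loop can be sketched as a toy evolutionary search in which the oldest candidate is dropped each generation. Everything here is an assumption made for illustration: an architecture is just a tuple of layer widths, and `proxy_score` is a hypothetical stand-in for the expensive step of training each network and measuring its accuracy.

```python
import random
from collections import deque

WIDTH_CHOICES = (16, 32, 64, 128)
MAX_LAYERS = 6

def proxy_score(arch):
    """Hypothetical fitness, standing in for 'train the network and measure accuracy'.

    Rewards architectures close to three layers of width 64; the maximum is 0.
    """
    depth_penalty = abs(len(arch) - 3)
    width_penalty = sum(abs(w - 64) for w in arch) / 64
    return -(depth_penalty + width_penalty)

def mutate(arch, rng):
    """Apply one random edit: add a layer, remove a layer, or resize a layer."""
    arch = list(arch)
    op = rng.choice(("resize", "add", "remove"))
    if op == "add" and len(arch) < MAX_LAYERS:
        arch.insert(rng.randrange(len(arch) + 1), rng.choice(WIDTH_CHOICES))
    elif op == "remove" and len(arch) > 1:
        arch.pop(rng.randrange(len(arch)))
    else:
        arch[rng.randrange(len(arch))] = rng.choice(WIDTH_CHOICES)
    return tuple(arch)

def evolve(generations=300, population_size=20, sample_size=5, seed=0):
    """Tournament selection plus mutation; the oldest member is discarded each step."""
    rng = random.Random(seed)
    population = deque()
    for _ in range(population_size):
        depth = rng.randint(1, MAX_LAYERS)
        arch = tuple(rng.choice(WIDTH_CHOICES) for _ in range(depth))
        population.append((arch, proxy_score(arch)))
    best = max(population, key=lambda item: item[1])
    for _ in range(generations):
        contenders = rng.sample(list(population), sample_size)
        parent = max(contenders, key=lambda item: item[1])
        child = mutate(parent[0], rng)
        entry = (child, proxy_score(child))
        population.append(entry)
        population.popleft()  # discard the oldest architecture
        if entry[1] > best[1]:
            best = entry
    return best

best_arch, best_score = evolve()
print(best_arch, best_score)
```

In a real search the fitness evaluation dominates the cost, which is why practical systems invest heavily in cheaper proxies such as shortened training runs.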

NAS is also often paired with techniques that address real-world data scarcity. NAS itself searches over architectures rather than generating data, but synthetic training data can make the models it discovers more robust and better at generalizing. Synthetic data can simulate a wide range of scenarios, providing a more comprehensive training set than real-world sources alone might offer. As a result, models trained with it can be better equipped to handle diverse and complex real-world situations.

NAS integrates seamlessly with AutoML, streamlining the creation of optimized deep learning architectures. This integration allows for a more end-to-end automation of the machine learning pipeline, from data preprocessing to model deployment. By combining the strengths of AutoML and NAS, organizations can accelerate their AI development processes, reducing the time and resources required to bring advanced models to market.

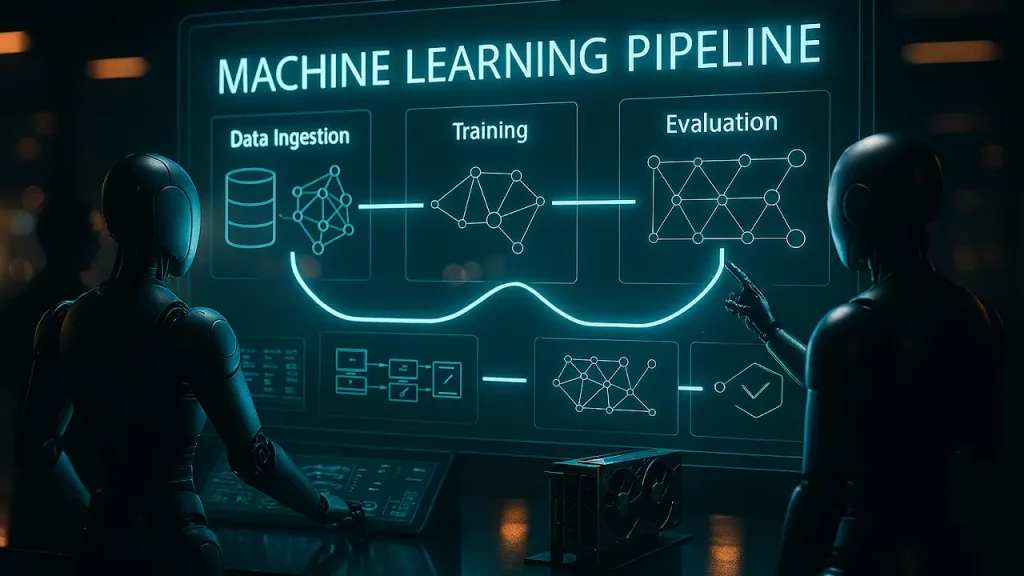

AI agents for machine learning: The next evolution

As automated machine learning matures, AI agents for ML are emerging as the next significant leap, promising a more dynamic and adaptive approach. These agents are designed to automate complex tasks, such as feature engineering and model selection, which are typically time-consuming and require extensive expertise. By streamlining the ML pipeline, AI agents reduce the workload on data scientists and can improve the efficiency and accuracy of the models they produce.

One remarkable example of this evolution is Google’s AutoML-Zero. This research project showed that an evolutionary search can assemble working machine learning algorithms from basic mathematical operations, with very little built-in human design. It suggests that automated systems can not only speed up existing processes but also discover new approaches. This level of autonomy is a significant step forward, as it opens the door to more sophisticated and adaptable ML systems.

Another critical function of AI agents is automated hyperparameter tuning. Hyperparameters play a crucial role in the performance of machine learning models, and optimizing them can significantly enhance model accuracy. Traditionally, this task has been a manual and often trial-and-error process. AI agents, however, can perform this tuning automatically, using sophisticated algorithms to find the optimal settings. This not only saves time but also ensures that the models are fine-tuned to their best possible performance, leading to more reliable and effective outcomes.
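The text does not say which algorithms such agents use. One widely used option is successive halving, sketched below: evaluate many configurations cheaply, keep the better half, and give the survivors a larger budget. The `noisy_score` function and the `quality` field are hypothetical stand-ins for partially training a model and measuring its validation score.

```python
import random

def noisy_score(config, budget, rng):
    """Hypothetical evaluation: a larger budget gives a less noisy quality estimate."""
    return config["quality"] + rng.gauss(0, 1.0 / budget)

def successive_halving(configs, min_budget=1, eta=2, seed=0):
    """Repeatedly keep the top 1/eta of configurations and multiply the budget by eta."""
    rng = random.Random(seed)
    survivors, budget = list(configs), min_budget
    history = []  # (budget used, number of survivors) for each round
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda c: noisy_score(c, budget, rng), reverse=True)
        survivors = ranked[: max(1, len(ranked) // eta)]
        history.append((budget, len(survivors)))
        budget *= eta
    return survivors[0], history

# Hypothetical candidates whose true quality rises with the index.
configs = [{"name": f"config_{i}", "quality": i / 15} for i in range(16)]
winner, history = successive_halving(configs)
print(winner["name"], history)
```

The design trades accuracy for speed: early rounds are noisy and may eliminate a good configuration, but most of the compute goes to the candidates that look promising.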

Moreover, AI agents are not isolated entities; they can work collaboratively to share findings and accelerate the learning process. This collective intelligence allows them to build on each other’s successes, rapidly improving the overall performance of the models they create. By sharing insights and data, these agents can refine their approaches and adapt to new challenges more quickly, fostering a continuous cycle of improvement. This collaborative nature is particularly valuable in dynamic environments where data is constantly changing, as it ensures that the models remain relevant and effective over time.

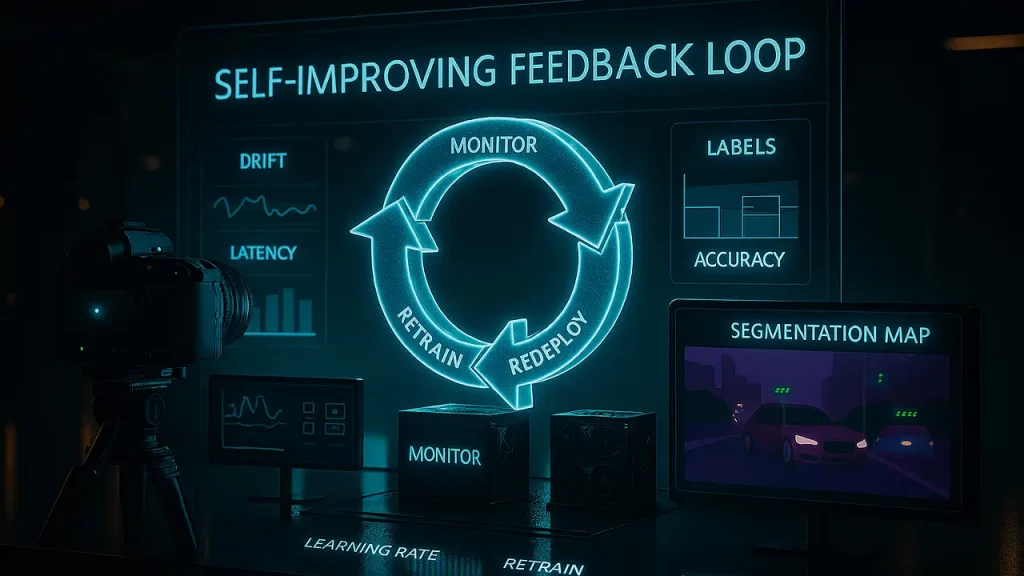

Self-improving AI: The path to autonomous optimization

The concept of self-improving AI, where models can autonomously optimize their performance over time, opens up a new frontier in the quest for true artificial intelligence. This capability is not just about creating more efficient algorithms; it’s about building systems that can adapt and evolve based on their interactions and the data they process. One of the key technologies driving this evolution is automated hyperparameter tuning. By automating the process of adjusting the parameters that control a model’s training, these systems can significantly enhance efficiency and performance, often outperforming manually tuned models.

Self-improving AI systems also benefit from continuous learning, a process where they refine their outputs based on user interactions. This feedback loop is crucial for applications ranging from recommendation systems to natural language processing. As users interact with the AI, the system gathers valuable data that it uses to make incremental improvements. For instance, a chatbot that learns from user conversations can gradually become more contextually aware and responsive, leading to a more seamless and satisfying user experience. This dynamic learning process is a significant step towards creating self-improving AI systems that can adapt to changing environments and user needs.
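A minimal sketch of such a feedback loop is an epsilon-greedy bandit, which keeps a running average of the reward each option earns and gradually favors the better one. The option names and the simulated click rates are assumptions for the example; a production system would learn from real user signals.

```python
import random

class FeedbackLearner:
    """Epsilon-greedy bandit that tracks the running mean reward of each option."""

    def __init__(self, options, epsilon=0.1, seed=0):
        self.rng = random.Random(seed)
        self.epsilon = epsilon
        self.counts = {o: 0 for o in options}
        self.values = {o: 0.0 for o in options}

    def choose(self):
        """Explore a random option with probability epsilon, else exploit the best."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.counts))
        return max(self.values, key=self.values.get)

    def update(self, option, reward):
        """Incrementally update the mean reward for the chosen option."""
        self.counts[option] += 1
        n = self.counts[option]
        self.values[option] += (reward - self.values[option]) / n

# Hypothetical simulated users: they prefer detailed answers 80% of the time.
TRUE_CLICK_RATE = {"short_answer": 0.2, "detailed_answer": 0.8}
learner = FeedbackLearner(TRUE_CLICK_RATE)
users = random.Random(1)
for _ in range(2000):
    option = learner.choose()
    reward = 1.0 if users.random() < TRUE_CLICK_RATE[option] else 0.0
    learner.update(option, reward)

print(learner.values, learner.counts)
```

The small exploration rate matters: without it the learner could lock onto whichever option happened to look good first and never discover a better one.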

Another critical aspect of self-improving AI is the reduction in human intervention. Traditional machine learning pipelines require extensive human oversight, from data preprocessing to model training and evaluation. With advances in ML pipeline automation, more of these steps run without manual effort. This accelerates development cycles and frees human experts to focus on more complex and creative tasks. Moreover, AI systems are beginning to identify and fix some faults on their own. By continuously monitoring and analyzing system performance, they can detect issues that might otherwise go unnoticed and trigger corrections, such as retraining or rolling back to an earlier model, thereby improving reliability and user trust. This self-healing capability is essential for maintaining high standards of performance in real-world applications where errors can have significant consequences.
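The monitoring half of that idea can be sketched as a sliding-window check that raises an alert when recent accuracy falls well below a baseline. The class name, window size, and tolerance are assumptions for illustration, and what happens after an alert (retraining, rollback, paging a human) is left to the surrounding system.

```python
from collections import deque

class PerformanceMonitor:
    """Tracks accuracy over a sliding window and flags sustained degradation."""

    def __init__(self, baseline, window=50, tolerance=0.1):
        self.baseline = baseline      # accuracy expected under normal conditions
        self.tolerance = tolerance    # allowed drop before an alert fires
        self.outcomes = deque(maxlen=window)

    def record(self, correct):
        """Record one prediction outcome; return True if an alert should fire."""
        self.outcomes.append(1.0 if correct else 0.0)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet to judge
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.baseline - self.tolerance

monitor = PerformanceMonitor(baseline=0.9, window=10, tolerance=0.1)
healthy = [monitor.record(True) for _ in range(10)]    # model behaving normally
degraded = [monitor.record(False) for _ in range(5)]   # a run of wrong predictions
print(healthy, degraded)
```

Waiting for a full window before alerting avoids reacting to a handful of early mistakes, at the cost of a short delay in detection.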

Synthetic data generation: Enhancing training without real data

Training these sophisticated models often hinges on the availability of high-quality data, a gap that synthetic data generation aims to bridge. In many industries, collecting and labeling large, diverse datasets can be prohibitively expensive, time-consuming, or even impossible due to privacy concerns. Synthetic data, generated by AI, can closely mimic real-world scenarios, providing a practical alternative for training machine learning models. This approach accelerates development and exposes models to a wider range of scenarios, which can improve their performance and reliability.
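In its simplest form, generating synthetic data means fitting a statistical model to a small real dataset and sampling new rows from it. The sketch below assumes independent Gaussian features and uses made-up height and weight values; real generators (simulators, GANs, diffusion models) capture far richer structure, including correlations between features.

```python
import random
from statistics import mean, stdev

def fit_gaussian_columns(rows):
    """Estimate a (mean, standard deviation) pair for each column of the real data."""
    columns = list(zip(*rows))
    return [(mean(col), stdev(col)) for col in columns]

def sample_synthetic(params, n, seed=0):
    """Draw n synthetic rows, sampling each feature independently."""
    rng = random.Random(seed)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]

# Hypothetical "real" records: (height in cm, weight in kg).
real_rows = [(170.0, 65.0), (160.0, 58.0), (180.0, 80.0), (175.0, 72.0), (165.0, 60.0)]

params = fit_gaussian_columns(real_rows)
synthetic_rows = sample_synthetic(params, n=1000)
print(params, synthetic_rows[:3])
```

Because each feature is sampled independently, this sketch loses the link between height and weight that exists in the real rows, which is exactly the kind of fidelity gap to check before training on synthetic data.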

In the medical imaging field, synthetic data has become a game-changer. Real medical images are often scarce and highly sensitive, making them difficult to obtain and use for training. However, by using synthetic data, researchers and developers can create vast datasets that closely resemble real patient scans. This has led to significant improvements in diagnostic models, enabling them to detect diseases more accurately and efficiently.

For instance, synthetic MRI and CT scans can be generated to represent various conditions, ensuring that AI models are well-prepared to handle a diverse array of medical cases. The ability to generate such data not only accelerates the training process but also helps in creating more inclusive and representative models, which is crucial for equitable healthcare.

The gaming industry is another domain where synthetic data plays a pivotal role. Game developers are increasingly leveraging synthetic data to create more realistic and engaging non-player characters (NPCs). By simulating a wide range of behaviors and interactions, synthetic data allows for the training of AI-driven NPCs that can adapt to player actions and create a more immersive gaming experience. This not only enhances the realism of the game but also reduces the need for extensive manual scripting and testing, streamlining the development process. The use of synthetic data in gaming also opens up new possibilities for dynamic and responsive game environments, where the AI can learn and improve over time, leading to more sophisticated and enjoyable gameplay.

Similarly, the development of autonomous vehicles heavily relies on synthetic data to ensure safety and reliability. Real-world driving scenarios are complex and unpredictable, and collecting enough data to cover all possible situations is a monumental task. Simulated driving scenarios, on the other hand, can be created to represent a wide range of driving conditions, from routine commutes to rare and dangerous situations. This synthetic data allows for extensive testing and validation of autonomous driving algorithms, ensuring that they can handle real-world challenges with confidence. By training on synthetic data, autonomous vehicles can be fine-tuned to recognize and respond to various traffic patterns, weather conditions, and road hazards, ultimately leading to safer and more reliable self-driving cars.
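A toy version of scenario generation randomizes a few parameters and deliberately oversamples rare events. The `Scenario` fields, value ranges, and `rare_event_rate` below are hypothetical; a real driving simulator exposes a much larger parameter space and renders full sensor data for each scenario.

```python
import random
from dataclasses import dataclass

WEATHER = ("clear", "rain", "fog", "snow")
TIMES_OF_DAY = ("day", "dusk", "night")

@dataclass(frozen=True)
class Scenario:
    weather: str
    time_of_day: str
    traffic_density: float     # vehicles per 100 m of road (hypothetical unit)
    pedestrian_crossing: bool  # the "rare and dangerous" event in this sketch

def generate_scenarios(n, rare_event_rate=0.2, seed=0):
    """Sample n scenarios, oversampling the rare event relative to real driving."""
    rng = random.Random(seed)
    return [
        Scenario(
            weather=rng.choice(WEATHER),
            time_of_day=rng.choice(TIMES_OF_DAY),
            traffic_density=round(rng.uniform(0.0, 10.0), 2),
            pedestrian_crossing=rng.random() < rare_event_rate,
        )
        for _ in range(n)
    ]

scenarios = generate_scenarios(500)
print(scenarios[0])
```

Raising `rare_event_rate` above its real-world frequency is the point of the exercise: the model sees far more dangerous situations in simulation than it could safely encounter on the road.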

Conclusion

As we wrap up this exploration, it’s clear that the future of machine learning is not just about automation but about creating systems that can evolve and improve on their own. AutoML, the clearest case of AI building AI, has changed the way we approach model creation. By streamlining the machine learning process, it has made it possible for even those with limited expertise to develop sophisticated models. This democratization of machine learning is a significant step forward, as it allows a broader range of individuals and organizations to leverage the power of AI.

One of the most exciting advancements in this domain is neural architecture search (NAS). This technology automates the design of deep learning models, leading to more accurate and efficient architectures. By reducing the need for manual tuning and experimentation, NAS saves time and tailors models to the specific tasks they are designed to perform. This level of automation is crucial in an era where data is abundant and the need for precise models is more critical than ever.

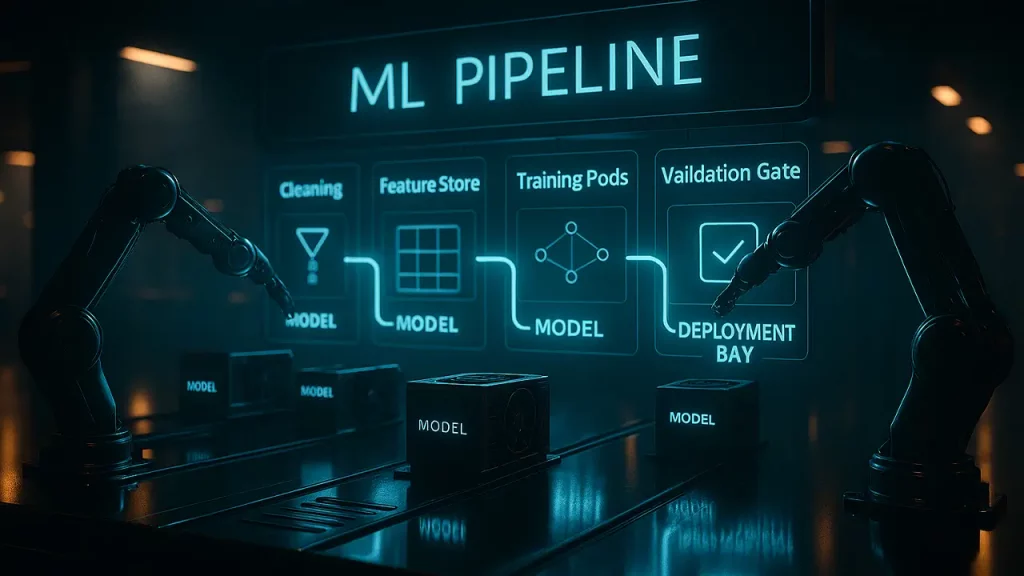

Another key player in this ecosystem is the AI agent for ML. These agents can manage the entire machine learning pipeline, from data preprocessing to model deployment. By automating these steps, they reduce the complexity and potential errors of manual workflows. This accelerates the development cycle and helps produce models that are robust and reliable across a wide range of scenarios and data inputs.

The concept of self-improving AI systems takes this automation a step further. These systems are designed to optimize themselves over time, reducing the need for human intervention. Through continuous learning and adaptation, self-improving AI systems can identify and correct their own weaknesses, leading to more accurate and efficient models. This self-optimization capability is particularly valuable in dynamic environments where data and requirements are constantly changing.

Finally, the role of synthetic data generation cannot be overlooked. By expanding training datasets, synthetic data improves the robustness and generalization of machine learning models. This is especially important in scenarios where real data is scarce or difficult to obtain. Synthetic data that closely mimics real-world conditions helps prepare models for a variety of situations, enhancing their performance and reliability.

In this rapidly evolving landscape, the integration of these technologies is not just a convenience but a necessity. As automated hyperparameter tuning and other forms of ML pipeline automation become more sophisticated, the potential for innovation and impact in fields ranging from healthcare to finance is immense. The future of machine learning is bright, and the journey towards fully autonomous AI systems is well underway, promising a world where technology can truly build and improve itself.
