Qwen2.5-Omni: How Alibaba Cloud’s Multimodal AI Competes with GPT-4o

Hey, tech enthusiasts! Welcome back to the channel. Today, we’re diving deep into a model that is aggressively changing the game, not just in China, but globally: Qwen2.5-Omni.

When OpenAI dropped GPT-4o, everyone focused on the speed and the “omni-modal” capability. But guess what? Alibaba Cloud just countered with a model that is smaller, open-source, and specifically engineered to run efficiently on everything from massive cloud clusters down to your laptop. If you are an enterprise architect, an AI agent builder, or just someone obsessed with the latest SOTA models, this report is your definitive guide. We’re going to dissect the architecture, look at the brutal performance benchmarks, and figure out the exact cost breakdown. The Qwen2.5-Omni economics are a fascinating study in strategic model design. Let’s jump in!

1. What is Qwen2.5-Omni and Why the Hype?

Defining the End-to-End Qwen2.5-Omni Multimodal Model

The excitement surrounding Qwen2.5-Omni stems from its nature as a unified, end-to-end multimodal system, marking a critical evolution in how AI handles diverse data. Unlike earlier, often slower, cascaded systems that required separate models for different functions (such as a Speech-to-Text model, followed by a Large Language Model, and then a Text-to-Speech synthesizer), Qwen2.5-Omni integrates all these functions seamlessly. This single, cohesive system is built for comprehensive multimodal perception, allowing it to process diverse inputs, including text, images, audio, and videos, while delivering real-time responses through both text generation and natural speech synthesis. This unified pipeline bypasses the latency and error accumulation inherent in handoff points, setting a new industry standard for truly integrated multimodal capability.
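To make the "accumulation" argument concrete, here is a back-of-the-envelope Python sketch of a naive three-stage cascade. The stage names, latencies, and success rates are hypothetical. The model assumes each stage must finish before the next one starts, and that errors are independent and never corrected downstream.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: float    # time before this stage can hand off (hypothetical)
    success_rate: float  # chance it passes on a correct result (hypothetical)


def cascade_totals(stages: list[Stage]) -> tuple[float, float]:
    """Return (total latency in ms, end-to-end success rate) for a sequential cascade.

    Assumes every stage finishes before the next starts and that an error in any
    stage is independent of the others and never corrected downstream.
    """
    total_latency = sum(s.latency_ms for s in stages)
    total_success = prod(s.success_rate for s in stages)
    return total_latency, total_success


# Illustrative numbers only, not measurements of any real system.
pipeline = [
    Stage("speech-to-text", 300.0, 0.95),
    Stage("LLM", 800.0, 0.90),
    Stage("text-to-speech", 200.0, 0.99),
]
latency, success = cascade_totals(pipeline)
print(f"{latency:.0f} ms before any audio, {success:.1%} end-to-end success")
```

Latency adds up at every handoff while success rates multiply. A single end-to-end model avoids both effects by keeping the whole pipeline inside one network.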

The Strategic Focus: Deployable AI

A key strategic choice that distinguishes Qwen2.5-Omni-7B is its compact size and its focus on deployability. Despite having only 7 billion parameters, the model is engineered to deliver strong multimodal performance. This matters because it lets the model run as a deployable multimodal AI on edge devices such as mobile phones and laptops, which targets the massive market for high-volume, cost-sensitive AI applications. By combining high performance with a resource-efficient footprint, Alibaba Cloud positions Qwen2.5-Omni as an ideal foundation model for agile, cost-effective AI agents, particularly those specializing in intelligent voice applications.

Real-Time Response: The User Experience Metric

In user-facing scenarios, the true measure of a multimodal model like Qwen2.5-Omni is speed. The model is specifically designed to set a new benchmark in real-time voice interaction and intelligent speech generation. Its architectural focus on streaming lets the AI hold conversations with minimal latency, avoiding the awkward pauses typical of older systems. This capability is not merely a convenience. It is essential for critical use cases, such as providing real-time audio descriptions to help visually impaired users navigate their environments or offering step-by-step guidance based on video analysis. The emphasis is clearly on an instantaneous conversational experience that matches human expectations.

If you’re already exploring Qwen2.5-Omni and other cutting-edge models, you’ll probably want practical tools too. Check out our dedicated marketplace at https://aiinnovationhub.shop/ for AI prompts, templates, plugins and starter kits designed to help you launch, test and monetize your next AI project faster, with less guesswork and more profit today.

2. The Alibaba Cloud Ecosystem and Qwen2.5-Omni’s Position

Integrating Qwen2.5-Omni into Alibaba Cloud’s Generative AI Portfolio

Qwen2.5-Omni is the latest, and arguably most versatile, contribution to Alibaba Cloud’s robust and widely adopted generative AI ecosystem. The company has demonstrated a long-term commitment to advancing AI accessibility by making over 200 generative AI models open-source. This strategy of openness has established goodwill within the developer community, solidifying platforms like Hugging Face and GitHub as primary distribution channels for the Qwen series.

Alibaba’s Open Strategy and Platform Capture

The decision to release high-quality models under open-source licenses serves a dual purpose. Firstly, it immediately allows developers and businesses worldwide to use and customize state-of-the-art technology without initial licensing fees, thereby accelerating innovation. Secondly, as these adopted models scale from development to production, the users often require guaranteed throughput, enterprise-grade tooling, and advanced compute resources, which inevitably leads them back to Alibaba Cloud’s premium services, such as the DashScope API or specialized hardware offerings. This method strategically captures the platform layer by democratizing the foundation model.

The Hierarchy of Qwen Models

To fully appreciate the place of Qwen2.5-Omni in the Alibaba Cloud lineup, it must be compared to its peers: Qwen2.5-Max (the large-scale Mixture-of-Experts flagship aimed at the highest reasoning capability), Qwen2.5-VL (specialized for vision-language tasks), and earlier specialized models like Qwen2-Audio. Qwen2.5-Omni represents a major consolidation effort. Performance metrics show that Qwen2.5-Omni is comparable to the similarly sized Qwen2.5-VL and outperforms Qwen2-Audio in pure audio capabilities. This integrated capability eliminates the need for enterprises to manage a complex stack of specialized models.

This consolidation provides significant operational advantages. Organizations can deploy a single, highly efficient 7B model instance, rather than orchestrating multiple specialized models. This simplification reduces VRAM requirements for self-hosting, streamlines the data pipeline, and significantly lowers the complexity of fine-tuning and maintenance, making Qwen2.5-Omni the default high-utility workhorse of the Qwen family.

Deployment Flexibility

The design ensures ubiquity by offering multiple deployment avenues:

- Cloud Access: Instant scaling via Alibaba Cloud’s DashScope API for users prioritizing convenience and elasticity.

- Private Hosting: Direct access to weights on GitHub and Hugging Face for secure, self-hosted deployments leveraging the Apache 2.0 license.

- Edge Implementation: Optimized versions supported by technologies like the MNN Chat App enable powerful multimodal capabilities directly on mobile devices and laptops.

This comprehensive deployment strategy caters to every potential user, from cloud startups to highly regulated enterprises.

3. Architecture: Thinker–Talker and the Generative AI Pipeline

The Innovative Design of Alibaba Cloud Generative AI

To understand the speed and stability of Qwen2.5-Omni, it is essential to look beyond the parameter count and focus on its foundational architectural innovation. This model is engineered to solve two fundamental problems in unified multimodal systems: interference between modalities and high latency in streaming inputs.

The Thinker–Talker Dual Architecture

The core of this system is the elegant Thinker–Talker architecture. This framework introduces a necessary separation between the cognitive processes and the generative output processes. The Thinker functions as the large language model, exclusively focused on complex reasoning, instruction following, and text generation. The Talker, conversely, is a dual-track autoregressive model that utilizes the hidden representations generated by the Thinker to produce audio tokens as output.

This separation is crucial because it minimizes interference among different modalities, ensuring high-quality output. By allowing the Thinker to focus purely on the semantic task and the Talker to manage the minute acoustic details of speech synthesis, the model achieves both rapid textual reasoning and superior naturalness and robustness in its voice output. The entire dual system is designed to be trained and inferred in an end-to-end manner, maintaining cohesive operation.
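Here is a deliberately tiny Python toy of the Thinker–Talker data flow. The `thinker` and `talker` functions are hypothetical stand-ins with no neural networks involved. They only show the shape of the pipeline: the Thinker emits a text token plus a hidden state, and the Talker turns each hidden state into an audio token as soon as it arrives, conditioning on its own previous output.

```python
from typing import Iterator


def thinker(prompt: str) -> Iterator[tuple[str, list[float]]]:
    """Toy Thinker: yields (text_token, hidden_state) pairs one at a time.

    A real Thinker is a transformer LLM; here the 'reply' is just the prompt
    upper-cased and the 'hidden state' is a two-number stand-in.
    """
    for position, word in enumerate(prompt.split()):
        text_token = word.upper()
        hidden_state = [float(position), float(len(word))]
        yield text_token, hidden_state


def talker(hidden_state: list[float], previous_audio_token: int) -> int:
    """Toy Talker: maps a Thinker hidden state to the next audio token id.

    Conditioning on previous_audio_token mimics autoregressive generation.
    The 4096-entry codebook size is an arbitrary placeholder.
    """
    return (int(sum(hidden_state)) + previous_audio_token) % 4096


def respond(prompt: str) -> Iterator[tuple[str, int]]:
    """Stream text and audio together: the Talker consumes each hidden state
    as soon as the Thinker produces it, instead of waiting for the full reply."""
    audio_token = 0
    for text_token, hidden_state in thinker(prompt):
        audio_token = talker(hidden_state, audio_token)
        yield text_token, audio_token


for text, audio in respond("turn left here"):
    print(text, audio)
```

The design point the toy preserves is separation of concerns. The Thinker never handles acoustics, and the Talker never decides what to say. It only renders what the Thinker's hidden states already encode.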

TMRoPE: Handling Time-Sensitive Multimodal Streams

Multimodal inputs, especially video and live audio, are inherently streaming and time-sensitive. A standard LLM architecture struggles to accurately synchronize data arriving sequentially from different input encoders. To address this, Qwen2.5-Omni introduces a novel positional embedding technique called TMRoPE (Time-aligned Multimodal RoPE).

This innovation specifically manages the time alignment of video and audio inputs, which are organized sequentially in an interleaved manner during block-wise processing. TMRoPE ensures that the model maintains accurate temporal context, allowing Alibaba Cloud’s generative AI system to handle complex, long-context inputs (such as extended video feeds or continuous spoken dialogue) with precise chronological understanding. This capability is paramount for applications requiring continuous monitoring or instruction.
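Here is a simplified Python sketch of the time-alignment idea behind TMRoPE, covering only the temporal component. The specifics are assumptions based on my reading of the Qwen2.5-Omni technical report, not the official code: one temporal position id per 40 ms, and audio and video interleaved in 2-second chunks with video frames first. The height/width components used for video patches are left out.

```python
MS_PER_TEMPORAL_ID = 40  # assumption: one temporal position id per 40 ms
CHUNK_MS = 2000          # assumption: interleave in 2-second chunks


def temporal_id(t_ms: int) -> int:
    """Map a frame's capture time (ms) to its temporal position id."""
    return t_ms // MS_PER_TEMPORAL_ID


def interleave(video_ms: list[int], audio_ms: list[int]) -> list[tuple[str, int, int]]:
    """Order frames block-wise: per chunk, video frames first, then audio frames.

    Returns (modality, capture_time_ms, temporal_id) tuples. Inputs are sorted
    capture times in integer milliseconds.
    """
    sequence = []
    last = max(video_ms + audio_ms, default=-1)
    for start in range(0, last + 1, CHUNK_MS):
        end = start + CHUNK_MS
        for modality, times in (("video", video_ms), ("audio", audio_ms)):
            for t in times:
                if start <= t < end:
                    sequence.append((modality, t, temporal_id(t)))
    return sequence


# 2 fps video and 40 ms audio frames over 2.4 seconds.
video = [0, 500, 1000, 1500, 2000]
audio = list(range(0, 2400, 40))
seq = interleave(video, audio)
print(seq[:5])
```

A video frame captured at 500 ms and an audio frame captured at 480 ms both get temporal id 12. Frames from the same 40 ms window therefore share a temporal position even though they sit in different places in the token sequence.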

Speed Engineering: Reducing Perceived Latency

The model also incorporates speed optimizations in the output phase. To decode generated audio tokens in a streaming manner and reduce the initial package delay (the time before the AI starts speaking), the model uses a sliding-window DiT (Diffusion Transformer). Restricting each position’s receptive field to a small window of neighboring blocks lets the decoder start producing audio as soon as the first few blocks of tokens exist, instead of waiting for the complete response. Responses therefore begin almost immediately, which greatly improves the conversational experience and removes the disruptive silence of systems that generate the full reply before speaking.
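Here is a minimal Python sketch of a block-wise sliding-window attention mask of the kind described above. The block size and the window (2 blocks of lookback, 1 block of lookahead) are assumptions chosen for illustration. Check the technical report for the values the model actually uses.

```python
def block_mask(num_tokens: int, block_size: int,
               lookback_blocks: int = 2, lookahead_blocks: int = 1) -> list[list[bool]]:
    """mask[i][j] is True when position i may attend to position j.

    Each position sees only its own block plus a few blocks behind and ahead,
    so its receptive field stays bounded no matter how long the reply gets.
    """
    mask = []
    for i in range(num_tokens):
        own_block = i // block_size
        row = [
            own_block - lookback_blocks <= j // block_size <= own_block + lookahead_blocks
            for j in range(num_tokens)
        ]
        mask.append(row)
    return mask


def tokens_required(block_index: int, block_size: int, lookahead_blocks: int = 1) -> int:
    """How many audio tokens must exist before block `block_index` can be decoded."""
    return (block_index + lookahead_blocks + 1) * block_size


mask = block_mask(num_tokens=12, block_size=2)
print([j for j, allowed in enumerate(mask[6]) if allowed])  # [2, 3, 4, 5, 6, 7, 8, 9]
```

Because no position looks beyond the next block, the first block of audio can be decoded once `tokens_required(0, block_size)` tokens exist, no matter how long the full reply turns out to be.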

4. Qwen2.5-Omni-7B: Open-Source Heart of the Model

The Significance of Qwen2.5-Omni 7B Open Source Release

The availability of the Qwen2.5-Omni-7B model as an open-source offering is a powerful market disruptor. Alibaba Cloud strategically released the model under the highly permissive Apache-2.0 license. This license is crucial because it allows commercial use, modification, and distribution, without forcing companies to open-source their derivative applications. For regulated industries and enterprises with strict data privacy mandates, this eliminates significant legal and intellectual property friction, making Qwen2.5-Omni a highly attractive foundation for proprietary AI products.

Performance Density in the 7B Design

The choice of the 7-billion-parameter size is not a constraint; it is a point of engineering mastery. The 7B model is designed to maximize performance density, meaning it packs state-of-the-art capability into a small footprint. Despite its size, Qwen2.5-Omni-7B demonstrates high performance on demanding multimodal tasks, including achieving top scores in speech and sound event recognition on the OmniBench dataset. This positions the 7B model in the optimal “utility zone,” providing high function without requiring the massive compute resources or costs associated with 70B+ models.

Democratizing Deployment Through Optimization

Alibaba Cloud has actively pushed the boundaries of hardware accessibility by supporting advanced deployment optimizations.

The release includes 4-bit quantized versions (GPTQ-Int4/AWQ) of the Qwen2.5-Omni-7B model. These quantized models are transformative: they maintain performance comparable to the full version while reducing GPU VRAM consumption by more than 50%. As a result, the 7B model, which normally requires 24 GB VRAM (FP16), can run effectively with only ~8 GB VRAM in its quantized form. This puts SOTA omni-modal AI inference within reach of a single high-end consumer GPU (such as the RTX 4090, achieving 115 tokens/second) and affordable cloud instances, drastically lowering the entry barrier for advanced AI deployment.
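A quick way to sanity-check the "more than 50%" figure is weight-only arithmetic. This Python sketch counts only the weights of a nominal 7-billion-parameter network. It ignores the encoders and the Talker, as well as the memory needed for activations and the KV cache, which is why the totals quoted above are higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NOMINAL_PARAMS = 7e9  # nominal size from the model name; ignores encoders and Talker

fp16 = weight_memory_gb(NOMINAL_PARAMS, 16)
int4 = weight_memory_gb(NOMINAL_PARAMS, 4)
print(f"FP16 weights: {fp16:.1f} GB, 4-bit weights: {int4:.1f} GB, "
      f"saving {1 - int4 / fp16:.0%}")
```

Moving from 16-bit to 4-bit weights cuts weight memory by 75% (14 GB to 3.5 GB). The overall saving is smaller in practice (24 GB to ~8 GB) because activations, the KV cache, and any layers kept at higher precision do not shrink the same way.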

Furthermore, to cater to the absolute edge market, Alibaba also released a Qwen2.5-Omni-3B model. This smaller variant lets Qwen2.5-Omni capabilities run on even more resource-constrained platforms, using technologies like the MNN Chat App directly on mobile devices. This relentless focus on optimizing both the open-source Qwen2.5-Omni-7B and its smaller sibling maximizes the range of hardware the model family can reach.

5. Real-time Voice: How Natural Is Qwen2.5-Omni’s Speech?

Achieving SOTA in Qwen2.5-Omni Real-time Speech

For any conversational AI to be truly effective, the quality and responsiveness of its voice interaction must be flawless. Qwen2.5-Omni has been specifically engineered to dominate the real-time speech domain through the highly optimized Talker component of its architecture.

Superior Voice Synthesis

The model’s performance metrics in speech generation are remarkable. Data shows that Qwen2.5-Omni’s streaming Talker outperforms most existing streaming and non-streaming alternatives in both robustness and naturalness. This is a substantial technical achievement. Streaming models are designed for speed but often sacrifice the subtle acoustic quality needed for human-like conversation (natural pauses, correct inflection). By outperforming even slower, non-streaming alternatives, Alibaba demonstrates that the Thinker-Talker separation successfully optimizes for both speed (low latency, streaming start) and high acoustic fidelity. This level of quality makes Qwen2.5-Omni exceptionally suitable for high-stakes voice interactions such as automated interviewing, personalized tutoring, and advanced customer service roles.

Qualitative Parity with Industry Leaders

Beyond technical benchmarks, subjective user feedback confirms the model’s quality. Independent reviews have noted that the voice quality is “actually amazing” and “almost feels very real,” suggesting it is “on par with something like OpenAI’s counterpart”. Achieving qualitative parity with models like GPT-4o Voice, which is currently the benchmark for natural speech, confirms that enterprises do not need to choose between an open-source solution and a premium voice experience. The high degree of perceived naturalness directly translates into higher user engagement and retention in voice-first applications.

Robust End-to-End Instruction Following

The power of Qwen2.5-Omni real-time speech is also measured by the stability of its input processing. The model must reliably convert messy spoken language into structured instructions without degrading its core intelligence. This robustness is confirmed by general cognitive benchmarks. Qwen2.5-Omni demonstrates performance in end-to-end speech instruction following that rivals its effectiveness when dealing with pure text inputs, validated by scores on MMLU and GSM8K. This proves that the multimodal fusion pipeline is robust, ensuring that the model’s ability to handle complex knowledge retrieval or mathematical reasoning remains stable regardless of the input modality. For deploying reliable voice agents in chaotic real-world environments, this input stability is non-negotiable.

6. Qwen2.5-Omni vs. GPT-4o: The Ultimate All-in-One Showdown

A Comparative Analysis: Qwen2.5-Omni vs GPT-4o

The competitive landscape for omni-modal AI is sharply divided between the closed proprietary power of GPT-4o and the resource-efficient, open flexibility of Qwen2.5-Omni. The analysis reveals that the choice between the two often hinges less on absolute benchmark supremacy and more on deployment strategy and economic viability.

The Open vs. Closed Strategic Divide

The most defining difference between Qwen2.5-Omni and GPT-4o is open-source availability. GPT-4o is a closed system accessible solely through an API. Conversely, Qwen2.5-Omni-7B is released under the Apache 2.0 license, granting complete control to the deploying entity. For large enterprises, this means the ability to self-host, ensure complete data privacy, fine-tune the weights on proprietary datasets, and perform security audits, none of which is possible with a closed API.

Reasoning and Performance Trade-offs

While both models achieve SOTA in their respective domains, performance metrics suggest that GPT-4o maintains an advantage in tasks requiring abstract, high-level academic reasoning (like the GPQA benchmark) and complex math (MATH). However, for generalized knowledge (MMLU) and grade-school math (GSM8K), the Qwen family models are highly competitive. This gap suggests that for the vast majority of practical business applications—customer service, summarizing, internal knowledge retrieval, and coding assistance—the reasoning capacity of Qwen2.5-Omni is more than adequate. The slight trade-off in peak academic performance is overwhelmingly compensated by Qwen’s superior flexibility and economic benefits.

Economic Warfare and Operational Cost

The cost component gives Qwen a decisive strategic edge. The economics of running models at high volume favor open-source, self-hosted solutions. For example, another model in the Qwen2.5 family (14B) was benchmarked as 2.3 times cheaper than GPT-4o-mini for the same workload when run at full capacity. This cost advantage, combined with the option to self-host the optimized ~8 GB VRAM version of Qwen2.5-Omni, means the marginal cost of inference approaches zero after hardware amortization (excluding power and maintenance). GPT-4o, while achieving an impressive average response time of 320 milliseconds, carries recurring API charges that can become prohibitive at massive scale.

Multimodal LLM Comparison: Qwen2.5-Omni vs GPT-4o

This comparison highlights the core differences between the fully open-source Qwen and the proprietary GPT-4o, focusing on accessibility, cost, and raw reasoning power across multimodal tasks.

| Feature | Qwen2.5-Omni (7B/3B) | GPT-4o (Omni) | Competitive Edge |
|---|---|---|---|
| Primary Modalities | Text, Image, Audio, Video (End-to-End) | Text, Image, Audio, Video (Omni) | Tie: Both are true Omni-modal |
| Open Source Status | Fully Open Source (Apache 2.0) | Closed Source (API Access Only) | Qwen2.5-Omni (Control/Privacy) |
| Edge Deployment | Highly Optimized (7B/3B, 4-bit Quantization) | Primarily Cloud/API based | Qwen2.5-Omni (Hardware Accessibility) |
| Response Latency | Optimized for real-time streaming (Talker) | Average 320 ms response time | Highly Competitive (Qwen optimized for streaming start) |
| API Pricing (High Volume) | ~$0.70 / 1M tokens (7B via DashScope) | Per-token API pricing; Qwen2.5-14B benchmarked 2.3x cheaper than GPT-4o-mini at scale | Qwen2.5-Omni (Operational Cost Efficiency) |
| High-End Reasoning (GPQA) | Competitive on general tasks | Dominant on complex, academic reasoning | GPT-4o (Raw Intelligence) |

| High-End Reasoning (GPQA) | Competitive on general tasks | Dominant on complex, academic reasoning | GPT-4o (Raw Intelligence) |

7. Business Scenarios: Qwen2.5-Omni Enterprise Use Cases

Practical Applications of Qwen2.5-Omni in the Enterprise

The unique combination of multimodality, deployability, and cost efficiency ensures that Qwen2.5-Omni is not just a laboratory curiosity but a foundational tool for high-value enterprise use cases.

1. Hyper-Efficient Conversational AI Agents

The model’s core strength lies in its ability to support intelligent voice applications and agile, cost-effective AI agents. For contact centers, this translates into next-generation self-service. By coupling real-time voice input and output with the ability to interpret accompanying visual data (if a customer shares a screen or video feed), Qwen2.5-Omni can handle complex troubleshooting and support queries that traditional text-only or lagging voice bots cannot. The naturalness and low latency of the voice responses help retain customers and dramatically reduce the need for human agent intervention, leading to significant operational savings.

2. Advanced Accessibility and Real-Time Guidance Systems

The ability of Qwen2.5-Omni to process and synchronize audio, video, and text streams makes it ideal for assistive technologies. The model can provide real-time audio descriptions to help visually impaired users navigate dynamic environments. Similarly, in manufacturing or culinary contexts, it can offer step-by-step guidance by analyzing live video of ingredients or tool use. These use cases depend heavily on the temporal alignment provided by TMRoPE, which keeps visual and auditory cues synchronized for accurate, timely instruction.

3. Structured Data Extraction from Unstructured Multimodal Sources

Modern compliance and quality assurance efforts require analyzing vast amounts of unstructured data, including recorded calls, training videos, and scanned documents. Qwen2.5-Omni excels here, boasting improved comprehension of structured data, such as tables, and the ability to generate reliable structured outputs, especially in JSON format. This capability allows enterprises to feed the model hours of multimodal content (e.g., a call recording + associated screen activity) and receive back a standardized, auditable JSON report detailing key interactions, compliance issues, or required training steps. These Qwen2.5-Omni enterprise use cases drastically streamline auditing processes and enhance operational oversight in regulated industries like finance and healthcare.
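To make JSON output auditable, you typically validate it before storing it. Here is a minimal Python sketch using only the standard library. The field names in `REQUIRED_FIELDS` are hypothetical examples of what a compliance report might contain, not a schema that Qwen2.5-Omni defines.

```python
import json

# Hypothetical report schema: field name -> expected Python type.
REQUIRED_FIELDS = {
    "call_id": str,
    "compliance_issues": list,
    "key_interactions": list,
    "training_steps": list,
}


def parse_report(raw: str) -> dict:
    """Parse a model's JSON reply and check it against REQUIRED_FIELDS.

    Raises ValueError if the text is not JSON, is not a JSON object,
    or has a missing or mistyped field.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("top-level JSON value must be an object")
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected):
            raise ValueError(f"field {field!r} should be {expected.__name__}")
    return data


reply = ('{"call_id": "c-001", "compliance_issues": [], '
         '"key_interactions": ["greeting"], "training_steps": []}')
report = parse_report(reply)
```

Rejecting malformed replies early (and re-prompting the model) keeps the audit trail clean. A full JSON Schema validator is the heavier-duty alternative when reports get more complex.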

8. Pricing, API, and Deployment Methods

Analyzing Qwen2.5-Omni API and Pricing Structures

The commercial viability of Qwen2.5-Omni is cemented by its highly flexible and economically attractive access options, catering to both API consumption and private self-hosting.

Cloud API Access and Aggressive Pricing

Alibaba Cloud offers Qwen2.5-Omni access through its DashScope platform, which includes a convenient OpenAI-compatible endpoint. For the 7B model, the pricing is highly aggressive: approximately $0.70 per 1 million tokens on a pay-as-you-go basis. The DashScope native API offers the same pricing structure along with a free 50,000-token trial.

This low cost per token is a calculated move designed to capture market share quickly. For new projects or small teams, the cost efficiency is paramount, allowing extensive prototyping and low-volume deployment without accumulating high bills. The compatibility with OpenAI’s API structure also means developers can easily route traffic to Qwen2.5-Omni with minimal code changes, lowering the technical barrier to entry.
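Because the endpoint is OpenAI-compatible, a request is just an ordinary chat-completions body. Here is a standard-library Python sketch that builds one. Several details are assumptions to verify against the current DashScope documentation: the base URL, the `qwen2.5-omni-7b` model id, the `Chelsie` voice, and the streaming requirement. `DASHSCOPE_API_KEY` is a hypothetical environment variable name.

```python
import json
import os
import urllib.request

# Assumptions: check these against the DashScope docs before use.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL_ID = "qwen2.5-omni-7b"


def build_chat_request(user_text: str, want_audio: bool = False) -> dict:
    """Build an OpenAI-style /chat/completions request body."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_text}],
        "stream": True,  # assumption: the Omni endpoint may require streaming
    }
    if want_audio:
        body["modalities"] = ["text", "audio"]
        body["audio"] = {"voice": "Chelsie", "format": "wav"}  # assumed voice name
    return body


def prepare_request(body: dict) -> urllib.request.Request:
    """Prepare (but do not send) the HTTP POST for a chat-completions body."""
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ.get('DASHSCOPE_API_KEY', '')}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = prepare_request(build_chat_request("Describe this product in one sentence."))
```

To actually send the request you would call `urllib.request.urlopen(req)`. With streaming enabled, the reply arrives as server-sent events. Teams already using the official `openai` SDK can instead point its `base_url` at the same endpoint, which is where the "minimal code changes" advantage comes from.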

The Financial Case for Self-Hosting

While the API pricing is competitive, the long-term cost benefits of self-hosting the open-source model are immense, especially for organizations with predictable, high-volume workloads.

The quantized Qwen2.5-Omni-7B models (4-bit GPTQ/AWQ) dramatically shift the economics of deployment, requiring only ~8 GB VRAM. A company can purchase hardware (like a consumer-grade GPU) capable of running this SOTA model at high throughput (115 tokens/second) for a one-time capital expenditure. Once the hardware is amortized, which for high-volume users happens quickly compared with accumulated API fees, the marginal cost of running the model drops to near zero (excluding maintenance and power). This self-hosting option provides control, data sovereignty, and exceptional cost stability, critical factors for enterprise architects planning years into the future.
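Here is a rough Python break-even sketch that uses two figures from this article: $0.70 per million tokens and 115 tokens/second. The $2,000 hardware price and $30/month power bill in the example are hypothetical. The model also ignores maintenance, differences between input and output pricing, and batching, which can push throughput well above a single stream.

```python
import math

SECONDS_PER_DAY = 86_400


def max_monthly_tokens(tokens_per_second: float, days: int = 30) -> float:
    """Upper bound on tokens one GPU can generate in a month at 100% utilization."""
    return tokens_per_second * SECONDS_PER_DAY * days


def break_even_months(hardware_cost_usd: float, monthly_tokens: float,
                      api_price_per_million_usd: float = 0.70,
                      monthly_power_usd: float = 0.0) -> float:
    """Months until buying hardware beats paying the API for the same tokens.

    Returns math.inf when the avoided API bill never exceeds running costs.
    """
    monthly_api_cost = monthly_tokens / 1_000_000 * api_price_per_million_usd
    monthly_saving = monthly_api_cost - monthly_power_usd
    if monthly_saving <= 0:
        return math.inf
    return hardware_cost_usd / monthly_saving


tokens = max_monthly_tokens(115)  # throughput figure quoted in this article
months = break_even_months(2_000, tokens, monthly_power_usd=30)  # hypothetical costs
print(f"{tokens / 1e6:.0f}M tokens/month, break-even after {months:.1f} months")
```

With these inputs, one fully utilized GPU replaces about $209/month of API usage and pays for itself in roughly 11 months. At lower utilization the break-even point moves further out, so fast amortization really depends on sustained high volume.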

Qwen2.5-Omni Deployment Model Comparison (Cloud API vs. Self-Host)

Choosing the right deployment model depends on budget, scale, and latency needs. This table contrasts a managed cloud service (Alibaba DashScope) with decentralized self-hosting (using quantized models).

| Deployment Model | Access Point | Pricing/Cost Factor | Hardware Requirement (7B) | Key Advantage |
|---|---|---|---|---|
| Cloud API (Pay-as-you-go) | Alibaba Cloud DashScope | ~$0.70 / 1M tokens (Variable cost) | Zero (Managed by Alibaba) | Instant scalability, zero infrastructure overhead. |
| Self-Hosting (Quantized) | Hugging Face (GPTQ/AWQ) | Fixed hardware cost + utility (High upfront cost, low marginal cost) | ~8 GB VRAM | Optimized for low-cost, decentralized edge deployment, high throughput (115 t/s). |

9. Benchmarks and Comparison with Other Multimodal Models

Validating Qwen2.5-Omni Benchmarks Against SOTA

Technical performance is the final pillar supporting the Qwen2.5-Omni value proposition. The model’s success is validated by its performance across a series of unified and specialized benchmarks, proving it is a genuine SOTA contender.

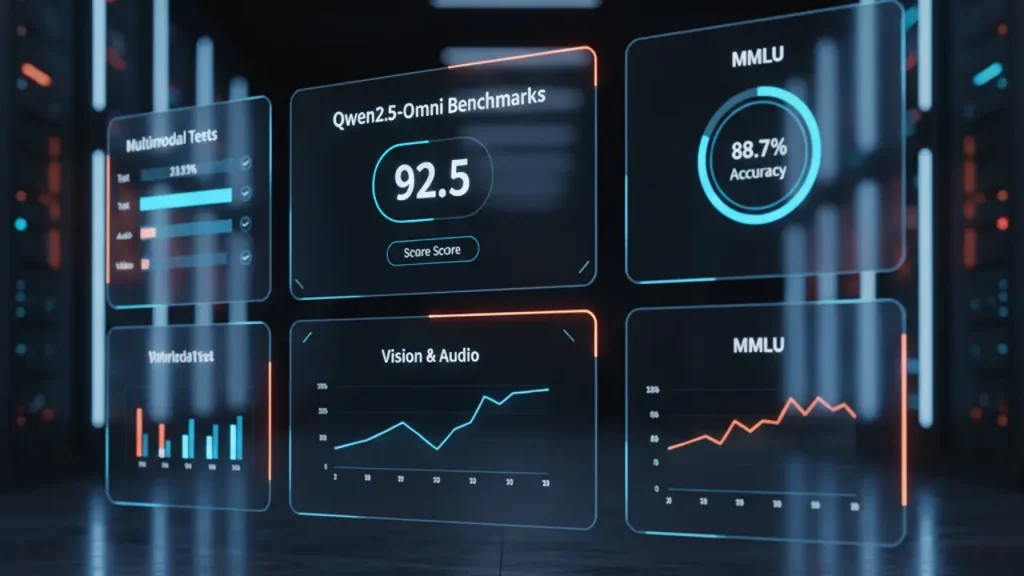

SOTA Performance on Unified Multimodal Tests

To assess its holistic capabilities, Qwen2.5-Omni was evaluated on OmniBench, a benchmark designed to test a model’s proficiency across fused modalities, where it achieves state-of-the-art performance [5]. This result indicates that the architectural innovations (particularly the Thinker-Talker design and TMRoPE) are highly effective at enabling robust, accurate reasoning over combined inputs, establishing a new technical bar for unified models in the 7B class.

The model also shows strong performance on complex cross-modal tasks that require understanding combined audio-visual input and answering in text. Specifically, Qwen2.5-Omni-7B scored strongly in the "Multimodality → Text" category, reaching an overall average of 56.13% across speech and sound event tasks on OmniBench [4]. This technical robustness is crucial for stable real-world applications.

Cognitive Stability Across Input Modalities

A key factor in the reliability of a multimodal agent is the consistency of its underlying reasoning regardless of how instructions are received. Qwen2.5-Omni excels in this area. Its end-to-end speech instruction following is comparable to its performance on pure text inputs, as evidenced by strong scores on general cognitive tests like MMLU (Massive Multitask Language Understanding) and GSM8K (grade-school math reasoning) [3]. In other words, audio transcription and input processing do not degrade the model’s core intelligence, so complex tasks issued by voice are executed reliably.
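If you want to check the text-versus-speech parity claim on your own workload, the measurement itself is simple. Ask the same questions once as typed text and once as spoken audio, then compare accuracy. This Python sketch covers only the scoring step. Collecting the two sets of predictions is left out, and the function names are hypothetical.

```python
def accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that exactly match the reference answers."""
    if not references or len(predictions) != len(references):
        raise ValueError("predictions and references must be non-empty and equal length")
    return sum(p == r for p, r in zip(predictions, references)) / len(references)


def modality_gap(text_preds: list[str], speech_preds: list[str],
                 references: list[str]) -> tuple[float, float, float]:
    """Return (text accuracy, speech accuracy, text minus speech)."""
    text_acc = accuracy(text_preds, references)
    speech_acc = accuracy(speech_preds, references)
    return text_acc, speech_acc, text_acc - speech_acc


# Toy answers for four multiple-choice questions (hypothetical).
refs = ["A", "B", "C", "D"]
text_acc, speech_acc, gap = modality_gap(["A", "B", "C", "A"], ["A", "B", "D", "A"], refs)
```

On a large enough question set, a gap near zero is what "comparable to text-only performance" should look like. With only a handful of items, noise will dominate the result.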

Establishing the Voice SOTA

In the critical domain of real-time voice, the benchmarks are clear: Qwen2.5-Omni’s streaming Talker outperforms most existing streaming and non-streaming alternatives in robustness and naturalness [5]. This makes Qwen a leading option for combining high acoustic quality with the necessary low latency, and a top choice for developers building voice-first applications.

Qwen2.5-Omni Multimodal Performance Benchmarks

These key performance results confirm Qwen2.5-Omni's technical standing, demonstrating its robust capabilities across complex academic tasks (MMLU) and crucial real-time applications (Speech Generation).

| Benchmark / Task | Type of Evaluation | Qwen2.5-Omni Performance | Significance |
|---|---|---|---|
| OmniBench | End-to-End Multimodal Assessment | Achieves state-of-the-art (SOTA) | Confirms unified multimodal excellence and technical lead in integration. |
| MMLU / GSM8K | Academic/Math Reasoning (Via Speech Input) | Comparable to text-only performance | Demonstrates robust reasoning capabilities irrespective of input modality. |
| Speech Generation | Naturalness and Robustness (Streaming Talker) | Outperforms most existing streaming and non-streaming alternatives | Establishes industry leadership in real-time, high-quality audio output. |

10. The Future of Multimodal AI: Qwen2.5-Omni’s Place in the Race

The Direction of Generative AI

The competitive environment—populated by titans like GPT-4o, Gemini, and Claude—is driving rapid technological convergence. The analysis of Qwen2.5-Omni confirms two dominant trends that are reshaping the future of multimodal AI.

1. The Decentralization of Intelligence

The most significant trend championed by Qwen2.5-Omni is the shift toward efficient, open-weight models optimized for decentralized deployment. The model’s design priorities (a compact 7B size, a roughly 8 GB VRAM footprint with 4-bit quantization, and the Apache 2.0 license) are explicitly aimed at enabling private, on-premise, and edge inference. This focus addresses the growing enterprise demand for data sovereignty and long-term cost predictability. The ability to run SOTA omni-modal AI on low-cost, readily available hardware minimizes reliance on expensive, recurring cloud API calls, fundamentally altering the total cost of ownership for large-scale AI operations.

2. The Dominance of Real-Time, End-to-End Systems

The market no longer tolerates noticeable latency. Both Qwen2.5-Omni and its chief competitor, GPT-4o, have prioritized response speed, recognizing that natural interaction requires near-instantaneous feedback. Qwen’s architectural innovations, such as the Thinker-Talker separation and streaming output decoding, deliver both speed and quality in voice interaction, securing its position as a technical leader in the real-time space. The future of multimodal AI is therefore inherently conversational, free of the lag that previously hampered user experience.

Final Assessment

Qwen2.5-Omni is a masterful piece of engineering that strategically targets the gap between expensive, closed cloud models and the practical needs of global enterprises. While GPT-4o may retain an edge in the most abstract reasoning tasks, Qwen2.5-Omni offers a superior proposition for deployability, infrastructure flexibility, and cost sustainability. For any organization looking to build high-volume, secure, and customizable AI agents that require seamless multimodal and real-time voice capabilities, the Qwen2.5-Omni-7B open-source model presents the most compelling, long-term strategic choice in the current landscape.

What do you think? Are you ready to deploy the 7B model locally, or are you sticking to the cloud API? Let me know in the comments below! Don't forget to hit that like button, subscribe, and check out more cutting-edge AI reviews right here on aiinovationhub.com!
