Apple Intelligence on-device AI: 7 powerful reasons to switch to local AI

In a world increasingly driven by artificial intelligence, Apple’s commitment to on-device AI sets a new standard for privacy and performance. By processing data locally, Apple keeps your information private while delivering fast results, from customizing Siri’s voice to enhancing camera features. Developers now have access to powerful tools like Core ML and the Apple Foundation Models framework, making it easier to create smarter, more secure apps. Join us as we dive into the top 7 on-device AI capabilities that are redefining the future of technology.

Exploring Apple’s on-device AI capabilities

Dive into the sophisticated realm of Apple’s on-device AI capabilities, where powerful algorithms operate seamlessly within the confines of your device. One of the most significant advantages of Apple’s on-device AI is its ability to process data locally. This means that instead of sending sensitive information to remote servers, the AI performs its tasks right on your iPhone, iPad, or Mac. This local processing not only enhances the speed of operations but also significantly boosts privacy, as your data remains on your device without being transmitted over the internet.

Real-time personalization is another area where Apple’s on-device AI shines. For instance, the ability to customize Siri’s voice or have the AI adapt to your usage patterns and preferences is a testament to the power of on-device processing. These personalized experiences are made possible because the AI can respond instantly to your interactions, without the latency that would be introduced by cloud-based processing. This immediate feedback loop ensures a more natural and intuitive user experience, making your device feel more like an extension of yourself.

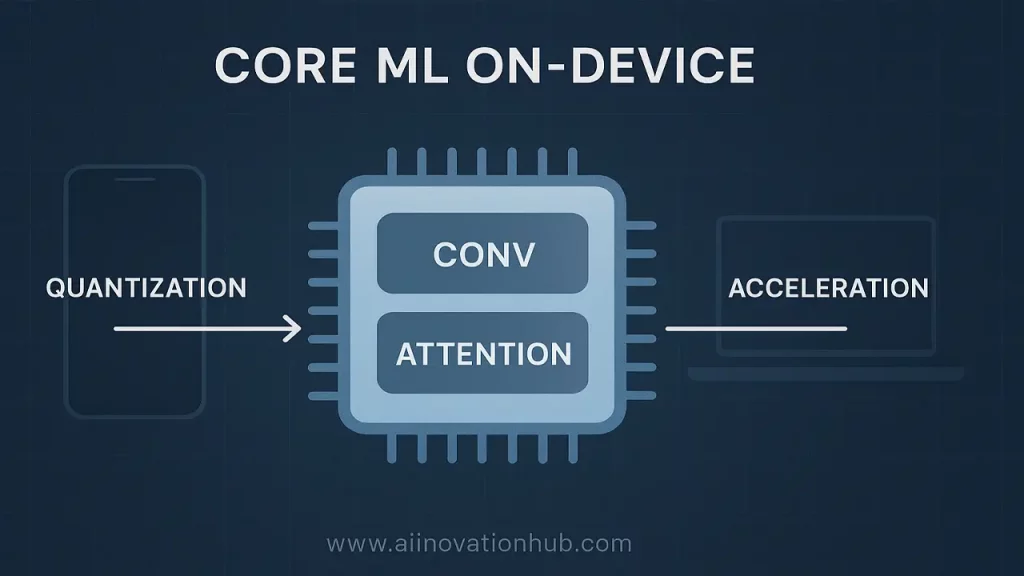

Core ML is the backbone of Apple’s on-device AI, facilitating advanced machine learning tasks directly on Apple devices. This framework allows developers to integrate complex models into their apps, enabling features such as image recognition, natural language processing, and predictive text. By leveraging Core ML on-device, developers can create applications that are both powerful and efficient, without the need for constant internet connectivity. This not only improves the user experience but also extends battery life, as the device doesn’t have to maintain a constant connection to the cloud.

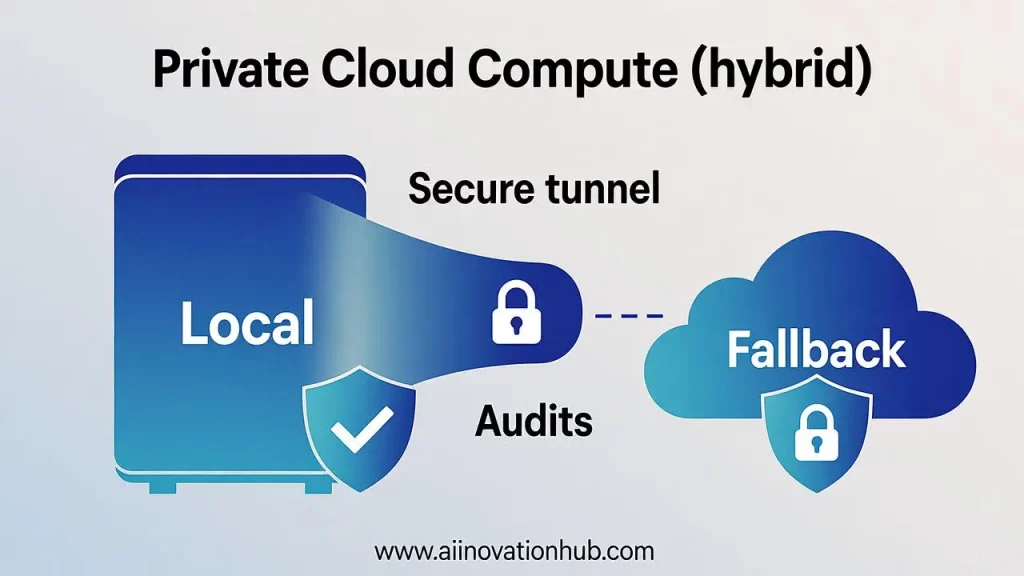

While on-device AI is a game-changer, it doesn’t mean that cloud services are obsolete. Private Cloud Compute complements on-device AI by providing secure offloading options for more resource-intensive tasks. This hybrid approach ensures that the device can handle the bulk of the processing, while the cloud steps in when necessary to handle complex computations. By reducing reliance on cloud services, Apple’s AI innovations improve overall efficiency and security, making your device more robust and reliable.
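To make the hybrid idea concrete, here is a minimal Python sketch of a dispatcher that keeps small requests on the device and offloads larger ones. Apple does not publish its routing criteria, so the `Request` type, the `route` function, and the `ON_DEVICE_TOKEN_BUDGET` threshold are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical budget: the largest request this sketch treats as
# feasible to run on the device. Not a real Apple limit.
ON_DEVICE_TOKEN_BUDGET = 4096


@dataclass(frozen=True)
class Request:
    prompt_tokens: int           # size of the input
    expected_output_tokens: int  # rough size of the response
    network_available: bool      # whether offloading is possible at all


def route(request: Request) -> str:
    """Return 'on_device', 'private_cloud', or 'unavailable'."""
    total = request.prompt_tokens + request.expected_output_tokens
    if total <= ON_DEVICE_TOKEN_BUDGET:
        # Small enough: handle locally, no data leaves the device.
        return "on_device"
    if request.network_available:
        # Too large for the device, but the cloud can step in.
        return "private_cloud"
    # Too large and offline: the request cannot be served right now.
    return "unavailable"


if __name__ == "__main__":
    print(route(Request(200, 100, network_available=False)))     # on_device
    print(route(Request(6000, 500, network_available=True)))     # private_cloud
    print(route(Request(6000, 500, network_available=False)))    # unavailable
```

The design choice worth noting is the order of the checks: the local path is tried first, so the network is only consulted when the device genuinely cannot handle the work.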

Developer access to Apple Intelligence: A game-changer

With developer access to Apple’s on-device AI, the stage is set for a revolution in app development and user experience. Apple has long been a pioneer in integrating advanced artificial intelligence directly into its devices, and now, with this expanded access, developers have the opportunity to harness these capabilities to create applications that are not only more intelligent but also more responsive and efficient. This shift is significant because it allows developers to build features that can process and analyze data in real-time, without the need for constant cloud connectivity.

On-device processing is a cornerstone of Apple’s privacy-first approach, ensuring that user data remains secure and private. By keeping data on the device, developers can build apps that respect user privacy while still delivering powerful and personalized experiences. This is particularly important in an era where data breaches and privacy concerns are at the forefront of user minds. Apple’s commitment to privacy is not just a marketing gimmick; it’s a fundamental part of their design philosophy, and this access to on-device AI tools is a testament to that commitment.

Core ML on-device integration is a key component of this developer toolkit. Core ML simplifies the process of building and deploying machine learning models, making it easier for developers to integrate AI into their apps. This framework not only streamlines the development process but also ensures that the models run efficiently on Apple devices, optimizing performance and battery life. With Core ML, developers can focus more on innovation and less on the technical complexities of AI implementation.

Access to Apple’s Foundation Models framework further accelerates AI development by giving apps direct access to the pre-trained on-device language model behind Apple Intelligence, which can be adapted to specific use cases. This means developers can quickly add sophisticated AI features to their apps without the need for extensive data sets or training resources. The Foundation Models framework is a powerful resource that democratizes AI development, making it accessible to a broader range of developers. Additionally, the developer sandbox for AI testing offers a safe and controlled environment where developers can experiment with new ideas and ensure that their apps perform optimally on Apple devices. This sandbox is crucial for fostering innovation and pushing the boundaries of what is possible with on-device AI.

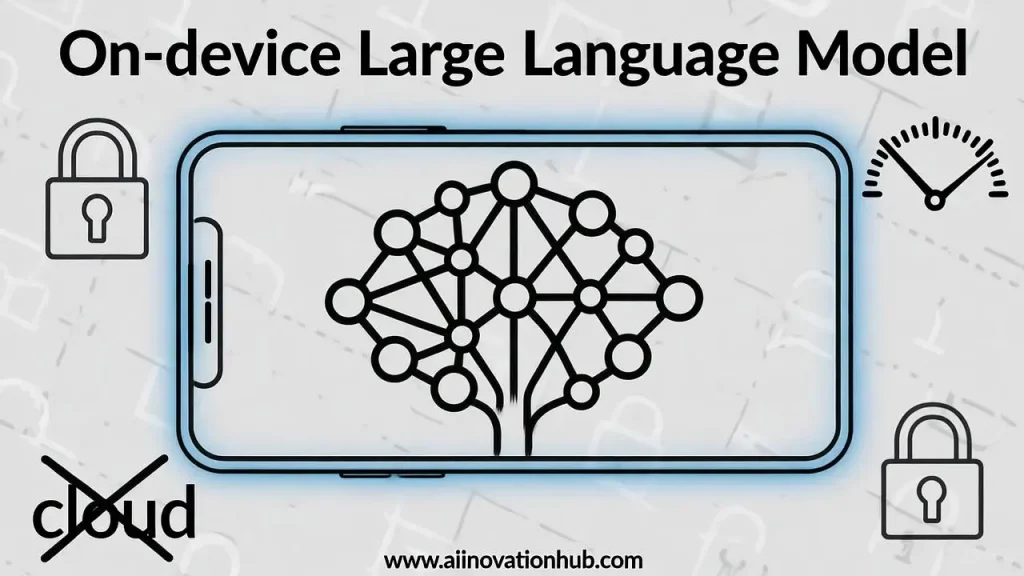

The power of on-device large language models

The advent of on-device large language models marks a significant leap forward, empowering devices to understand and respond to human language with unprecedented accuracy. These models process data locally, which means that sensitive information remains on the user’s device, significantly enhancing privacy. This local processing also leads to faster response times, as the device doesn’t need to send data to a remote server and wait for a response. The immediacy of on-device AI is particularly noticeable in applications like Siri, where text generation and natural language processing have become more seamless and intuitive, greatly improving user interactions.

Apple’s commitment to on-device AI has extended to real-time language translation, a feature that is becoming increasingly important in our globalized world. By leveraging on-device large language models, Apple devices can translate text and speech instantly, without the need for a constant internet connection. This not only reduces latency but also ensures that the translation process is more reliable and efficient. Moreover, the reduced dependency on cloud computing means lower costs for both users and developers, as the need for extensive server infrastructure and data transmission is minimized.

In addition to these benefits, on-device AI models, such as those powered by Core ML, enable a wide range of applications to run more smoothly and efficiently. From personal assistants to educational tools, the ability to process and generate language locally opens up new possibilities for developers and users alike. This shift towards on-device processing is part of a broader trend in AI, where the balance between cloud and edge computing is being redefined to prioritize user experience and data security.

How Private Cloud Compute enhances security and efficiency

Private Cloud Compute extends the security model of on-device processing to the cloud, creating a more efficient and secure environment for users. Most requests are handled locally on the device. When a task is too demanding, it is sent to Apple’s servers, which, according to Apple, use the data only to fulfil the request and do not retain it. Keeping most data local to the device significantly reduces the risk of data breaches and unauthorized access. This is particularly important in today’s digital landscape, where data privacy is a top concern for both individuals and businesses.

When data remains on the device, it is less likely to be intercepted during transmission, which helps maintain its confidentiality and integrity. Additionally, local data processing minimizes the latency that often accompanies cloud-based services, leading to faster and more responsive applications. This is especially crucial for dynamic applications and services that require real-time processing, such as voice assistants and augmented reality experiences.

One of the key advantages of this hybrid design is that it minimizes data transfer. By processing most data on the device, the need for frequent and large data exchanges with remote servers is greatly reduced. This not only improves the performance of apps but also enhances the overall user experience. Users can enjoy seamless and immediate interactions without the delays often associated with cloud-based computations. Furthermore, this approach reduces bandwidth requirements and can lead to lower data usage costs, which is beneficial for both users and developers. For developers, this means that their apps can run more efficiently, even in areas with limited or unreliable internet connectivity, ensuring a consistent user experience across different environments.

Apple devices are equipped with the Secure Enclave, a dedicated subsystem that plays a vital role in protecting the most sensitive data on the device. The Secure Enclave is isolated from the main processor and safeguards material such as encryption keys and biometric data, so that it remains protected from potential threats. This level of security is essential for applications that handle personal information, such as financial transactions, health data, and biometric authentication.

Encryption also protects communication between the device and cloud services, further safeguarding data as it moves between the local device and any necessary cloud resources. This encryption ensures that even if data is transmitted, it remains confidential and cannot be accessed by unauthorized parties. By combining these security features with Private Cloud Compute, Apple provides a robust framework for developers to build highly secure and efficient applications.

Leveraging Core ML for advanced on-device AI

Leveraging Core ML, developers can harness the full potential of on-device AI, integrating advanced machine learning models directly into their applications. One of the most significant advantages of Core ML is its ability to reduce latency and boost performance. By processing AI tasks locally on the device, Core ML minimizes the need for data to travel to and from remote servers, leading to faster and more responsive applications. This is particularly beneficial for real-time applications such as image recognition, natural language processing, and augmented reality, where immediate feedback is crucial for user experience.
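The latency argument can be checked with simple arithmetic. The sketch below is an illustration only: the function names and all of the millisecond figures are assumptions, not measurements of Core ML or of any real network. It compares a cloud round trip with local inference against the per-frame time budget of a real-time app.

```python
def cloud_latency_ms(network_round_trip_ms: float, server_inference_ms: float) -> float:
    """Total time for a cloud request: network round trip plus server compute."""
    return network_round_trip_ms + server_inference_ms


def frame_budget_ms(frames_per_second: float) -> float:
    """Time available per frame at a given frame rate."""
    return 1000.0 / frames_per_second


def meets_frame_budget(latency_ms: float, frames_per_second: float) -> bool:
    """True if a result arrives within a single frame at the given frame rate."""
    return latency_ms <= frame_budget_ms(frames_per_second)


if __name__ == "__main__":
    # Assumed figures: 80 ms round trip, 10 ms on a server, 20 ms on the device.
    cloud = cloud_latency_ms(network_round_trip_ms=80.0, server_inference_ms=10.0)
    local = 20.0
    # At 30 frames per second, each frame has roughly 33 ms to spare.
    print(cloud, meets_frame_budget(cloud, 30))  # 90.0 False
    print(local, meets_frame_budget(local, 30))  # 20.0 True
```

Under these assumed numbers the server computes faster than the device, yet the cloud path still misses the frame budget because the network round trip dominates. That is the case where local processing matters most.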

Moreover, Apple’s on-device AI protects user data privacy through local processing. Unlike cloud-based AI solutions, which often require transmitting sensitive data to external servers, Core ML runs inference on the device, so input data does not have to leave it. This not only enhances security but also aligns with Apple’s commitment to user privacy. Developers can now build powerful AI features with far less exposure to data breaches or unauthorized access, providing a safer and more trustworthy environment for their users.

Core ML supports a wide range of machine learning models, making it a versatile tool for diverse applications. Whether you’re working on a simple classification task or a complex neural network, Core ML offers the flexibility to integrate various models seamlessly. This broad support allows developers to choose the best model for their specific use case, ensuring optimal performance and accuracy. From health and fitness apps that analyze user data to enhance personalized recommendations, to productivity tools that offer intelligent text predictions, Core ML’s model support is a game-changer in the world of on-device AI.

In addition to its robust model support, Core ML is complemented by the Apple Foundation Models framework. This framework gives developers access to Apple’s pre-trained on-device language model, which can be adapted to specific tasks, reducing the time and resources needed to build custom AI solutions. The Apple Foundation Models framework is designed to handle complex language tasks, such as natural language understanding, summarization, and text generation. By leveraging this pre-trained model, developers can focus on creating innovative user experiences rather than the intricacies of model training and deployment.

Apple’s on-device AI: Why it matters

Apple’s on-device AI isn’t just a technological feat; it’s a pivotal step towards a more secure, efficient, and personalized user experience. By processing data locally, on-device AI ensures that user information remains private and is not transmitted to external servers. This local processing not only enhances privacy but also significantly boosts the speed of AI-driven features. Unlike cloud-based AI, which can suffer from latency issues due to network constraints, on-device AI operates in real-time, providing instant responses and seamless interactions.

One of the most prominent examples of Apple’s on-device AI in action is Siri, Apple’s voice assistant. Siri’s ability to understand and respond to voice commands quickly and accurately is a direct result of on-device AI. Similarly, camera enhancements in Apple devices, such as real-time image processing and advanced photo editing capabilities, are powered by sophisticated on-device AI models. These features not only improve the user experience but also showcase the potential of AI when it is processed locally.

Reducing reliance on cloud servers is another significant advantage of on-device AI. By handling data processing on the device itself, Apple’s on-device AI minimizes the need for constant internet connectivity. This not only improves efficiency but also ensures a more reliable user experience, especially in areas with limited or no internet access. Moreover, on-device AI can operate even when the device is offline, making it a robust solution for a wide range of applications.

On-device AI also enables real-time processing for augmented reality (AR) and health monitoring apps. AR apps, for instance, can use on-device AI to render detailed and interactive experiences without the delay associated with cloud-based processing. Health monitoring apps can analyze biometric data instantly, providing users with immediate feedback and insights. This real-time capability is crucial for applications where timely information can make a significant difference.

Continuous learning and updates are essential for maintaining the relevance and effectiveness of AI models. Core ML supports on-device personalization, in which an updatable model is retrained locally (through `MLUpdateTask`) on the user’s own data, so the model stays current without that data leaving the device. This approach to continuous learning is a testament to Apple’s commitment to both innovation and user privacy. By keeping the data on the device, Apple can introduce new features and improvements while maintaining a high level of security and trust.
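The idea of updating a model locally can be sketched without any Apple API. The Python example below is a conceptual illustration, not Core ML code: `NearestCentroid` is a hypothetical class that learns incrementally from examples that stay in local memory, and it stands in for whatever updatable model an app would actually ship.

```python
import math


class NearestCentroid:
    """Tiny classifier that is updated one example at a time, entirely locally."""

    def __init__(self) -> None:
        self._sums: dict[str, list[float]] = {}  # label -> per-feature running sums
        self._counts: dict[str, int] = {}        # label -> number of examples seen

    def update(self, features: list[float], label: str) -> None:
        """Fold one new labelled example into the model."""
        sums = self._sums.setdefault(label, [0.0] * len(features))
        if len(sums) != len(features):
            raise ValueError("feature length does not match earlier examples")
        for i, value in enumerate(features):
            sums[i] += value
        self._counts[label] = self._counts.get(label, 0) + 1

    def predict(self, features: list[float]) -> str:
        """Return the label whose centroid is closest to the given features."""
        if not self._counts:
            raise ValueError("model has not been updated with any examples")
        best_label, best_distance = "", math.inf
        for label, sums in self._sums.items():
            count = self._counts[label]
            # Squared Euclidean distance to this label's mean feature vector.
            distance = sum((v - s / count) ** 2 for v, s in zip(features, sums))
            if distance < best_distance:
                best_label, best_distance = label, distance
        return best_label


if __name__ == "__main__":
    model = NearestCentroid()
    model.update([0.0, 0.0], "resting")   # hypothetical sensor readings
    model.update([10.0, 10.0], "running")
    print(model.predict([1.0, 1.0]))      # resting
    print(model.predict([9.0, 8.0]))      # running
```

Only running sums and counts are stored, so each update is cheap and the raw examples never need to be uploaded or even retained.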

Developer’s toolkit: Core ML on-device and performance acceleration

Discover the developer’s toolkit that combines Core ML with performance acceleration, enabling apps to run faster and smoother than ever before. Core ML, Apple’s machine learning framework, is designed to integrate seamlessly with iOS, macOS, watchOS, and tvOS, allowing developers to deploy sophisticated AI models directly on Apple devices. This Core ML on-device capability is a game-changer, as it ensures that AI-powered features are not only available but also highly responsive and efficient. By running models locally, developers can provide a more fluid user experience, reducing the latency that often comes with cloud-based processing.

On-device processing is not just about speed; it also enhances privacy and security. When data is processed locally, it doesn’t need to be transmitted to remote servers, minimizing the risk of data breaches and ensuring that sensitive information remains on the user’s device. This is particularly important for applications that handle personal data, such as health and finance apps. Moreover, local processing can work even in the absence of an internet connection, making it a reliable solution for a wide range of scenarios.

Apple’s acceleration technologies are another critical component of this developer toolkit. These technologies optimize the performance of AI models, making them more efficient and reducing the computational load on the device. For instance, Apple’s Neural Engine, which is part of the A-series and M-series chips found in modern Apple devices, is specifically designed to handle complex machine learning tasks with minimal power consumption. This means that developers can create AI-driven apps that are not only fast but also energy-efficient, prolonging battery life and enhancing overall device performance.

In addition to Core ML’s on-device capabilities, Private Cloud Compute plays a complementary role in the developer’s toolkit. While on-device processing is ideal for many tasks, some applications may still benefit from the additional computational power and storage capacity of the cloud. Private Cloud Compute allows developers to offload certain tasks to the cloud while maintaining the privacy and security of user data. By combining on-device and cloud-based processing, developers can create hybrid solutions that leverage the best of both worlds, ensuring that apps are both powerful and secure.

The integration of Core ML with Apple’s on-device AI and Private Cloud Compute is a testament to Apple’s commitment to providing developers with the tools they need to create cutting-edge applications. This combination not only enhances the performance and efficiency of apps but also ensures that they are built with user privacy and security in mind. As developers continue to explore the potential of these technologies, we can expect to see more innovative and sophisticated AI-driven applications that transform the user experience.

Core ML on-device and large language models

The integration of Core ML with large language models opens up a world of possibilities, from smarter personal assistants to more intuitive user interfaces. By leveraging Core ML, developers can deploy sophisticated AI models directly on Apple devices, ensuring that these models run efficiently and responsively. This on-device deployment significantly reduces latency, as data no longer needs to be sent to and from a remote server for processing. Instead, the on-device large language model can handle tasks in real-time, providing users with immediate and seamless interactions.

One of the most compelling advantages of this integration is the enhanced user privacy. With Core ML, data is processed locally on the device, meaning sensitive information, such as personal conversations or health data, does not have to leave the user’s device. This approach not only helps apps meet stringent privacy regulations but also builds trust among users who are increasingly concerned about the security of their data. For instance, in personalized health monitoring applications, the on-device large language model can analyze user inputs and provide tailored advice without transmitting any personal health information to external servers.

The combination of Core ML and large language models also accelerates AI tasks, leading to improved app performance. Core ML optimizes the model’s execution, making it faster and more efficient. This performance boost is crucial for applications that require real-time processing, such as voice assistants or augmented reality apps. Developers can create more sophisticated and feature-rich applications without compromising on speed or user experience. The Core ML on-device capabilities, in conjunction with powerful large language models, enable apps to perform complex tasks, such as natural language understanding, image recognition, and predictive analytics, all while maintaining top-notch performance.

Apple’s on-device AI solutions are empowering developers to push the boundaries of what is possible on mobile and desktop devices. The ability to run advanced AI models locally means that developers can create applications that are not only innovative but also highly responsive. For example, a health monitoring app can use these technologies to provide real-time feedback and personalized insights, enhancing the user’s experience and potentially improving their health outcomes. This level of integration and performance is a testament to Apple’s commitment to advancing AI capabilities while keeping user privacy and security at the forefront.

Apple Foundation Models framework

The Apple Foundation Models Framework is a game-changer, providing a robust foundation for developers to build and refine sophisticated AI applications. This framework is designed to simplify the process of integrating AI into apps, making it more accessible and efficient. By leveraging the Apple Foundation Models framework, developers can focus on creating innovative features and user experiences without getting bogged down in the complexities of AI model development and deployment.

One of the standout features of the Apple Foundation Models framework is the range of tasks its pre-trained language model can handle. From summarization and text extraction to classification and content generation, the model can be adapted to specific use cases without training from scratch. This versatility not only enhances app functionality but also ensures that developers can quickly respond to user needs and market trends. For instance, a developer working on a health and wellness app can pair it with a Core ML image recognition model to help users identify and track their food intake more accurately, while another working on a productivity tool can leverage the language model to improve text summarization and translation features.

On-device processing is a cornerstone of the Apple Foundation Models framework, aligning perfectly with Apple’s commitment to user privacy and security. By keeping data processing local to the device, the framework minimizes the risk of data breaches and ensures that sensitive information remains under the user’s control. This approach is particularly important in applications where user data is highly personal, such as health monitoring or financial management. Developers can build apps that users trust, knowing their data is secure and private.

Seamless integration with Core ML on-device further accelerates AI performance, making apps faster and more efficient. Core ML, Apple’s machine learning framework, is optimized for on-device execution, which means that AI models can run with minimal latency and maximum efficiency. This integration not only improves the user experience by reducing load times and increasing responsiveness but also ensures that apps can perform complex AI tasks without draining the device’s battery. For example, a real-time language translation app can provide instant translations without the need for a constant internet connection, making it more reliable and user-friendly.

Foundation Models empower developers to create innovative, context-aware applications, driving AI forward. With the ability to deploy powerful models on-device, developers can build apps that understand and respond to user context in real-time. This opens up new possibilities for personalized experiences, such as apps that can suggest relevant content based on a user’s location and time of day, or smart home applications that can adjust settings based on user preferences and environmental conditions. The Apple Foundation Models framework is not just a tool for building AI applications; it’s a catalyst for pushing the boundaries of what on-device AI can achieve.

Final verdict

After exploring the myriad benefits and innovations, it’s clear that Apple Intelligence on-device AI sets a new standard for privacy and performance. This technology not only enhances user experience by providing real-time, context-aware interactions but also ensures that sensitive data remains secure on the device, away from prying eyes. The seamless integration of AI into everyday tasks, from voice recognition to image analysis, demonstrates Apple’s commitment to delivering powerful, yet private, solutions to its users.

One of the standout features of Apple’s AI ecosystem is Core ML on-device, which empowers developers to create sophisticated applications without compromising on performance or user data. By leveraging Core ML, developers can build apps that run efficiently on a user’s device, utilizing the advanced hardware capabilities of Apple’s devices. This not only speeds up processing times but also reduces the dependency on cloud services, which can be slower and less secure. The ease of integration and the extensive documentation provided by Apple make it a go-to framework for developers looking to incorporate cutting-edge AI into their applications.

On-device large language models, another critical component of Apple’s AI strategy, deliver powerful and secure user interactions. These models can understand and generate human-like text, enabling more natural and intuitive communication between users and their devices. Whether it’s for chatbots, voice assistants, or content generation, the ability to process and generate text on-device ensures that user data is protected and that interactions are lightning-fast. This is particularly important in an era where data privacy is a top concern for consumers and businesses alike.

The Apple Foundation Models framework further accelerates AI innovation and deployment. By providing a robust set of pre-trained models and tools, Apple is democratizing access to advanced AI capabilities. Developers can now focus on building innovative features and applications, knowing that the underlying AI infrastructure is both powerful and reliable. This framework not only speeds up development cycles but also ensures that the AI models are optimized for performance and efficiency.

In summary, Apple’s on-device AI ecosystem is a testament to the company’s dedication to user privacy and performance. By providing developers with Apple Intelligence developer access, robust frameworks like Core ML, and powerful on-device large language models, Apple is paving the way for a new era of intelligent, secure, and efficient applications. As technology continues to evolve, it’s evident that Apple’s approach will play a pivotal role in shaping the future of AI.
